As a child, when my mother told me to tidy my room, I liked to reply that only a genius could master chaos. It was a very welcome excuse to shirk my responsibilities. When I started an apprenticeship in a trade after finishing school, the first thing my master craftsman emphasized was keeping things tidy. Tools had to be put back in their bags after use, opened boxes of the same material had to be combined, and of course we had to sweep up several times a day. I can say right away that I never perceived these things as harassment, even if they seemed annoying at first, because we quickly learned the benefits of the motto “keep things clean.”

Tools that are always put back in their place let us see at a glance whether anything is missing. We can then go looking for it right away, and the likelihood of things being stolen is drastically reduced. With work materials, too, you maintain a good overview of what’s been used up and what needs to be replaced. Five nearly empty boxes containing only one or two items each not only take up space but also lead to miscalculations of available resources. Finally, it’s also true that one feels less comfortable in a dirty environment, and cleanliness demonstrates to the client that one works in a focused and planned manner.

Due to this early experience, when the concept of Clean Desk was introduced as a security measure in companies a few years ago, I didn’t immediately understand what was expected of me. After all, the Clean Desk principle had been second nature to me long before I completed my computer science degree. But let’s start at the beginning. First, let’s look at what Clean Desk actually is and how to implement it.

Anyone who delves deeply into the topic of security learns early on that most successful attacks aren’t carried out using complicated technical maneuvers. They’re much more mundane and usually originate from within, not from the outside. As the adage goes, opportunity makes the thief. When you combine this fact with the insights of social engineering, a field primarily shaped by the hacker Kevin Mitnick, a new picture emerges. This doesn’t mean you have to place your own employees under suspicion right away. In a building, there are also external cleaning staff, security personnel, and tradespeople who usually have easy access to sensitive areas. Therefore, the motto should always be: trust is good, but control is better. That is why a Clean Desk Policy is implemented.

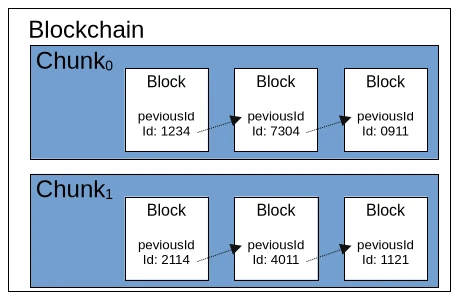

The first rule is: anyone leaving their workstation for an extended period must switch off their devices. This applies especially at the end of the workday. For shorter absences, at least the desktop should be locked. The concept behind this is quite simple: a switched-off device offers no security vulnerabilities that an outside attacker could exploit to get into the company network. Furthermore, it reduces power consumption and lowers the risk of fires caused by short circuits. To prevent the devices from being physically stolen, they are secured to the desk with special locks. I’ve personally experienced devices being stolen during lunch breaks.

Since I myself have stayed in hotels a lot, my computer’s hard drive is encrypted as a matter of course. This also applies to all external storage devices such as USB sticks or external SSDs. If the device is stolen, at least no one can access the data stored on it.

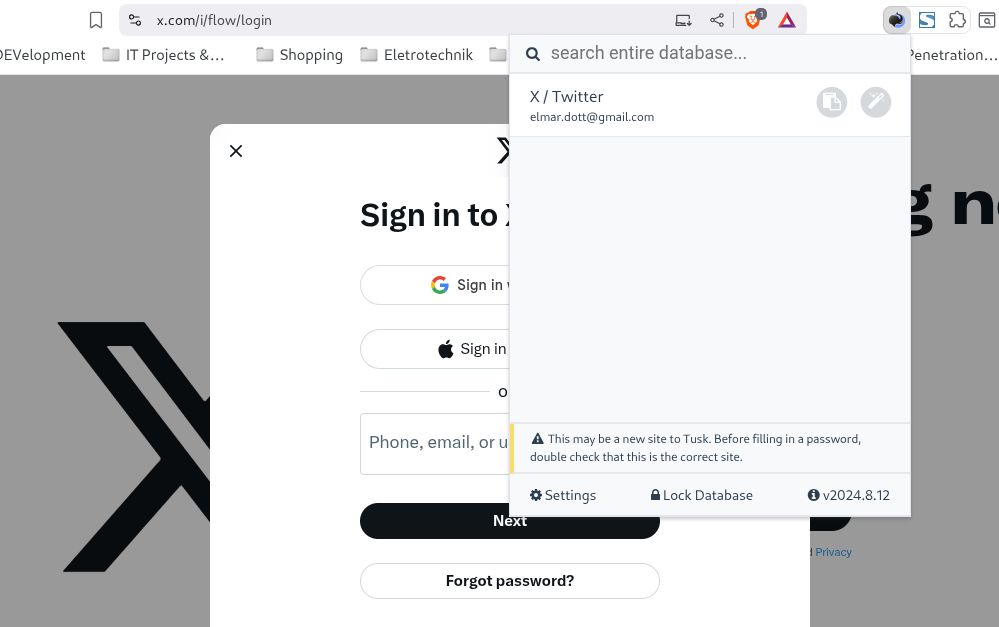

It goes without saying that secure encryption is only possible with a strong password. Many companies have specific rules that employee passwords must meet. It’s also common practice to require a new password every 30 to 90 days, and this new password must be different from the last three used.
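The rotation rule just described (a maximum password age, and no reuse of the last three passwords) can be sketched in a few lines of Python. This is only an illustration under my own assumptions, not how any particular company or product implements it: the names `HISTORY_SIZE`, `MAX_AGE_DAYS`, `remember`, `is_reused`, and `is_expired` are made up for this example, and the 90-day limit is simply the upper end of the range mentioned above.

```python
import hashlib
import hmac
import os
from datetime import date, timedelta

# Assumed policy values taken from the text, not from any real product.
HISTORY_SIZE = 3    # new password must differ from the last three
MAX_AGE_DAYS = 90   # upper end of the 30-to-90-day range


def hash_password(password: str, salt: bytes) -> bytes:
    """Derive a salted hash so the history never stores plaintext."""
    return hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, 100_000)


def remember(password: str, history: list[tuple[bytes, bytes]]) -> None:
    """Append the password's salted hash and keep only the newest entries."""
    salt = os.urandom(16)
    history.append((salt, hash_password(password, salt)))
    del history[:-HISTORY_SIZE]  # drop everything older than the last three


def is_reused(candidate: str, history: list[tuple[bytes, bytes]]) -> bool:
    """Return True if the candidate matches one of the remembered passwords."""
    for salt, stored in history[-HISTORY_SIZE:]:
        if hmac.compare_digest(hash_password(candidate, salt), stored):
            return True
    return False


def is_expired(last_changed: date, today: date) -> bool:
    """Return True once the password is older than MAX_AGE_DAYS."""
    return today - last_changed > timedelta(days=MAX_AGE_DAYS)
```

In this sketch, a password change would first call `is_reused` on the proposed password and reject it on a match, and only then call `remember`. Storing salted hashes instead of the passwords themselves means that even the history file reveals nothing useful if it falls into the wrong hands.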

It’s often pointed out that passwords shouldn’t be written on a sticky note stuck to the monitor. I’ve never personally experienced this. It’s much more typical for passwords to be written on a note hidden under the keyboard or mousepad.

Another aspect to consider is notes left on desks, wall calendars, and whiteboards. Even seemingly insignificant information can be quite valuable. Since it’s rather difficult to decide what truly needs protecting and what doesn’t, the general rule is: all notes should be stored securely at the end of the workday, inaccessible to outsiders. Of course, this only works if lockable storage space is available. In sensitive sectors like banking and insurance, the policy even goes so far as to prohibit colleagues from entering their vacation dates on wall calendars.

Of course, these considerations also include your own wastebasket. It’s essential to ensure that confidential documents are disposed of in specially secured containers. Otherwise, the entire effort to maintain confidentiality becomes pointless if you can simply pull them out of the trash after work.

But the virtual desktop is also part of the Clean Desk Policy. Especially in times of video conferences and remote work, strangers can easily catch a glimpse of your workspace. This reminds me of my lecture days when a professor had several shortcuts to the trash on his desktop. We always joked that he was recycling, with separate trash folders for Word files, Excel files, and so on.

The Clean Desk Policy has other effects as well. It’s much more than just a security concept. Employees who consistently implement this policy also bring more order to their thoughts and can thus work through tasks one by one with greater focus, leading to improved performance. Personal daily planning is usually structured so that all started tasks can be completed by the end of the workday. This is similar to the trades. Tradespeople also try to complete their jobs by the end of the workday to avoid having to return for a short time the next day, because every visit involves a considerable amount of preparation time.

Implementing a Clean Desk Policy follows the three Ps (Plan, Protect & Pick). At the beginning of the day, employees decide which tasks need to be completed (Plan) and select the corresponding documents and necessary materials for easy access (Pick). During working hours, they must ensure that no unauthorized persons have access to information, for example during breaks, and at the end of the day everything is securely stored (Protect). This daily, easy-to-implement routine of preparation and follow-up quickly becomes a habit, and the time required can be reduced to just a few minutes, so that hardly any work time is wasted.

With a Clean Desk Policy, the overwhelming piles of paper disappear from your desk, and by considering which tasks need to be completed each day, you can focus better on them, which significantly improves productivity. At the end of the day, you can also mentally cross some items off your to-do list, leading to greater satisfaction.