From experience, most of us know how difficult it is to express what we mean when we talk about quality. Why is that so? There are many different views on quality, and each of them has its importance. What has to be defined for our project is something that fits its needs and works within the budget. Striving for perfection can be counterproductive if a project is to be completed successfully. We will start from a research paper written by B. W. Boehm in 1976 called “Quantitative evaluation of software quality.” Boehm highlights the different aspects of software quality and the right context. Let’s take a deeper look at this topic.

When we discuss quality, we should focus on three topics: code structure, implementation correctness, and maintainability. Many managers care only about the first two aspects, but not about maintenance. This is dangerous because enterprises will not invest in custom development just to use the application for only a few years. Depending on the complexity of the application, the cost of creating it can reach hundreds of thousands of dollars, so it is understandable that the expected business value of such activities is often estimated highly. A lifetime of 10 years or more in production is very typical. To keep the benefits, adaptations will be mandatory, which implies a strong focus on maintenance. Clean code alone doesn’t mean your application can be changed easily. A very accessible article that touches on this topic was written by Dan Abramov. Before we go further into how maintenance could be defined, we will discuss the first point: the structure.

Scaffolding Your Project

An often underestimated problem in development departments is a missing standard for project structures. A fixed definition of where files have to be placed helps team members find points of interest quickly. Such a meta-structure for Java projects is defined by the build tool Maven. More than a decade ago, companies tested Maven and readily adapted the tool to the established folder structure used in their projects. This resulted in heavy maintenance work as more and more infrastructure tools for software development came into use. Those tools operate on the standard that Maven defines, meaning that every customization affects the success of integrating new tools or exchanging an existing tool for another.

Another aspect to look at is the company-wide defined META architecture. When possible, every project should follow the same META architecture. This will reduce the time a new developer needs to join an existing team and catch up with its productivity. This META architecture has to be open to adaptation, which can be achieved in two simple steps:

- Don’t be concerned with too many details;

- Follow the KISS (Keep it simple, stupid.) principle.

A classic violation of the KISS principle is the heavy customization of standards. A very good example of the effects of strong customization is described by George Schlossnagle in his book “Advanced PHP Programming.” In chapter 21 he explains the problems created for the team by modifying the original PHP core instead of following the recommended way via extensions. As a result, every update of the PHP version had to be patched manually to include the team’s own adaptations to the core. Taken together, structure, architecture, and KISS already define three quality gates, which are easy to implement.

The open-source project TP-CORE, hosted on GitHub, concerns itself with the aforementioned structure, architecture, and KISS. There you can find its approach to putting them into practice. This small Java library rigidly follows the Maven convention with its directory structure. For fast compatibility detection, releases are numbered according to semantic versioning. A layered structure was chosen as its architecture and is fully described here. An examination of its main architectural decisions yields the following:

Each layer is defined by its own package, and the files also follow a strict rule: no special prefix or suffix is used. The Logger functionality, for example, is declared by an interface called Logger with the corresponding implementation LogbackLogger. The API interfaces can be found in the package “business” and the implementation classes are located in the package “application.” Naming like ILogger and LoggerImpl should be avoided. Imagine a project that was started 10 years ago with a LoggerImpl based on Log4J. Now a new requirement arises: the log level needs to be changed at run time. To solve this challenge, the Log4J library could be replaced with Logback. Now it is understandable why it is a good idea to name the implementation class after the interface, combined with the implementation detail: it makes maintenance much easier! The same convention can be found within the Java standard API. The interface List is implemented by ArrayList. Again, the interface is not labeled as something like IList and the implementation not as ListImpl.
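
The naming rule can be sketched in a few lines of Java. This is only an illustration, not code taken from TP-CORE: the classes InMemoryLogger, ConsoleLogger, and OrderService are hypothetical, and plain Java stands in for Logback so that the example runs without dependencies. A LogbackLogger would follow the same pattern and delegate to the Logback library.

```java
import java.util.ArrayList;
import java.util.List;

// API layer: the interface carries the plain functional name.
interface Logger {
    void info(String message);
}

// Implementation layer: the class name states the technical detail
// behind the interface (hypothetical stand-in for a LogbackLogger).
final class InMemoryLogger implements Logger {
    private final List<String> entries = new ArrayList<>();

    @Override
    public void info(String message) {
        entries.add("INFO " + message);
    }

    // Read-only copy of everything logged so far.
    List<String> entries() {
        return List.copyOf(entries);
    }
}

// A second implementation; its name again reveals the detail.
final class ConsoleLogger implements Logger {
    @Override
    public void info(String message) {
        System.out.println("INFO " + message);
    }
}

// Client code depends on the interface only, so the implementation
// can be exchanged without touching this class.
final class OrderService {
    private final Logger logger;

    OrderService(Logger logger) {
        this.logger = logger;
    }

    void placeOrder(String id) {
        logger.info("order placed: " + id);
    }
}
```

Because OrderService only knows the Logger interface, replacing one implementation with another is a change in a single place, and the class name tells a maintainer immediately which technology sits behind it.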

To summarize this short section, a full set of measurable rules was defined to describe our understanding of structural quality. From experience, this description should be short. If other people can easily comprehend your intentions, they willingly accept your guidance and defer to your knowledge. In addition, the architect will be much faster in detecting rule violations.

Measure Your Success

The most difficult part is keeping the code clean. Some advice is not bad per se but may not prove useful in the context of your project. In my opinion, the most important rule is to always activate compiler warnings, no matter which programming language you use! All compiler warnings have to be resolved when a release is prepared. Organizations dealing with critical software, like NASA, strictly apply this rule in their projects with great success.

Coding conventions about naming, line length, and API documentation, like JavaDoc, can be simply defined and observed by tools like Checkstyle. This process can run fully automated during your build. Be careful; even if the code checkers pass without warnings, this does not mean that everything is working optimally. JavaDoc, for example, is problematic. With an automated Checkstyle, it can be assured that this API documentation exists, although we have no idea about the quality of those descriptions.

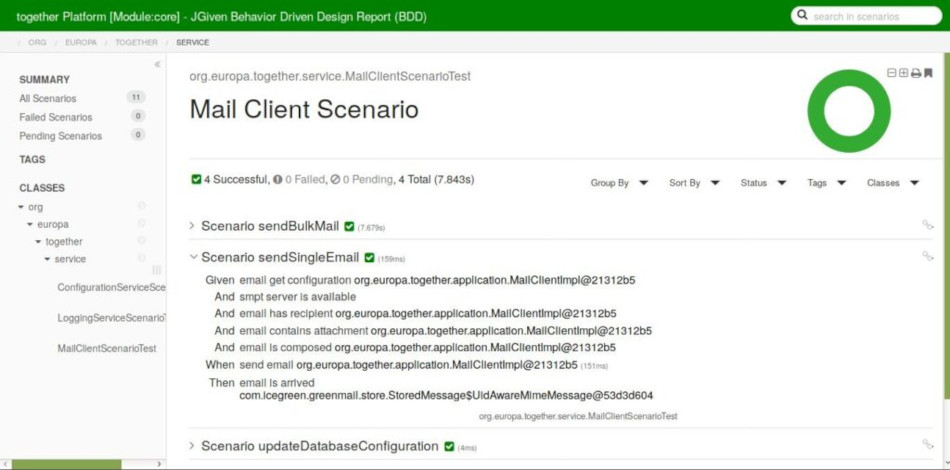

There should be no need to discuss the benefits of testing here; let us rather walk through test coverage. The industry standard of 85% of code covered by test cases should be followed, because coverage below 85% will not reach the complex parts of your application. 100% coverage just burns through your budget fast without yielding higher benefits. The TP-CORE project serves as an example: its test coverage is mostly between 92% and 95%. This was done to explore what is realistically possible.

As already explained, the business layer contains just interfaces, defining the API. This layer is explicitly excluded from the coverage checks. Another package is called internal and it contains hidden implementations, like the SAX DocumentHandler. Because of the dependencies the DocumentHandler is bound to, it is very difficult to test this class directly, even with Mocks. This is unproblematic given that the purpose of this class is only for internal usage. In addition, the class is implicitly tested by the implementation using the DocumentHandler. To reach higher coverage, it also could be an option to exclude all internal implementations from checks. But it is always a good idea to observe the implicit coverage of those classes to detect aspects you may be unaware of.

Besides the low-level unit tests, automated acceptance tests should also be run. Paying close attention to these points may avoid a variety of problems. But never trust those fully automated checks blindly! Regularly repeated manual code inspections will always be mandatory, especially when working with external vendors. In our talk at JCON 2019, we demonstrated how easily test coverage can be faked. To detect other vulnerabilities, you can additionally run checkers such as SpotBugs.
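
One way coverage numbers can be inflated is a test that executes code without asserting anything. The following sketch illustrates the idea and is not necessarily the technique shown in the talk. The classes PriceCalculator and CoverageDemo are hypothetical, and plain boolean methods stand in for a test framework to keep the example self-contained.

```java
// Hypothetical production class with a deliberate bug:
// the discount is added instead of subtracted.
final class PriceCalculator {
    static int discountedPrice(int price, int discount) {
        return price + discount;
    }
}

final class CoverageDemo {
    // "Test" without assertion: it runs every line of discountedPrice,
    // so a coverage tool counts the method as covered, yet nothing is verified.
    static boolean fakeTestPasses() {
        PriceCalculator.discountedPrice(100, 20);
        return true;
    }

    // Honest test: the same call, but the result is compared with the
    // expected value, which exposes the bug.
    static boolean realTestPasses() {
        return PriceCalculator.discountedPrice(100, 20) == 80;
    }
}
```

Both methods produce the same line coverage for PriceCalculator, but only the second one fails on the bug. This is why a coverage report alone says little about the quality of the tests, and why a manual look at the test code remains necessary.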

Tests don’t indicate that an application is free of failures, but they indicate a defined behavior for implemented functionality.

For a while now, SCM suites like GitLab or Microsoft Azure have supported pull requests, which were introduced long ago in GitHub. Those workflows are nothing new; IBM Synergy used to apply the same technique. A Build Manager was responsible for merging the developers’ changes into the codebase. All the revisions made by a developer were simply added to the repository by the Build Manager, who did not have sufficiently profound knowledge to judge the implementation quality. The usual practice was simply to ensure that the build was not broken and that compilation always produced an artifact.

Enterprises have rediscovered this technique as a new strategy in the form of pull requests. Managers now often decide to use pull requests as a quality gate. In my personal experience, this slows down productivity because it takes time until the changes are available in the codebase. Understanding the branch and merge mechanism helps you decide on a simpler branch model, like release branch lines. Tools like SonarQube operate on those branches to monitor the overall quality goal.

If a project needs an orchestrated build, with a defined order in which artifacts have to be created, you have a strong hint that refactoring is needed.

The coupling between classes and modules is often underestimated. It is very difficult to visualize the bindings between modules automatically. You will notice very quickly when loose coupling is violated, because the complexity of your build logic increases.

Repeat Your Success

Rest assured, changes will happen! It is a challenge to keep your application open for adjustments. Several of the previous recommendations have implicit effects on future maintenance. Good source quality makes it easier to be prepared, but there is no guarantee. In the worst case, the end of the product lifecycle (EOL) is reached when mandatory improvements or changes can no longer be implemented, for example because of an eroded code base.

As already mentioned, loose coupling brings numerous benefits with respect to maintenance and reuse. Reaching this goal is not as difficult as it might look. First, avoid including third-party libraries as much as possible. Just to check whether a String is empty or null, it is unnecessary to depend on an external library; these few lines are quickly written yourself. A second important point regarding external libraries: “Only one library to solve a problem.” If your project deals with JSON, then decide on one implementation and don’t incorporate various artifacts. These two points heavily impact security: a third-party artifact we avoid using cannot cause any security vulnerabilities.
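
The String check mentioned above really is only a few lines. A minimal sketch, where the class name StringChecks and the method name isNullOrEmpty are chosen freely for illustration:

```java
// Small project-owned utility instead of a third-party dependency.
final class StringChecks {
    private StringChecks() {
        // utility class, no instances
    }

    // True if the reference is null or the string has length zero.
    // Note: a string containing only whitespace is NOT treated as empty.
    static boolean isNullOrEmpty(String value) {
        return value == null || value.isEmpty();
    }
}
```

Whether whitespace-only strings should count as empty is a project decision; the point is that the rule lives in one small class under your own control.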

Once the decision for an external implementation is made, try to encapsulate its usage in your project by applying design patterns like proxy, facade, or wrapper. This makes a replacement easier because the code changes are not spread around the whole codebase. You don’t need to change everything at once if you follow the advice on how to name the implementation class and provide an interface. Even though an SCM is designed for collaboration, there are limitations when more than one person is editing the same file. Using a design pattern to hide information allows you to roll out your changes iteratively.
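
As a rough sketch of such a wrapper, assume the project needs to write flat JSON objects. The interface JsonWriter and the classes HandwrittenJsonWriter and CustomerExporter are hypothetical names. To keep the example free of dependencies, the implementation is written by hand with deliberately simplified escaping; in a real project this class would delegate to the one JSON library the team has chosen.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.stream.Collectors;

// Project-owned interface: the only JSON type the rest of the code sees.
interface JsonWriter {
    String write(Map<String, String> fields);
}

// Stand-in implementation (assumption for this sketch). A class that
// delegates to a real library would implement the same interface.
final class HandwrittenJsonWriter implements JsonWriter {
    @Override
    public String write(Map<String, String> fields) {
        return fields.entrySet().stream()
                .map(e -> quote(e.getKey()) + ":" + quote(e.getValue()))
                .collect(Collectors.joining(",", "{", "}"));
    }

    // Simplified escaping: handles backslash and double quote only.
    private static String quote(String s) {
        return "\"" + s.replace("\\", "\\\\").replace("\"", "\\\"") + "\"";
    }
}

// Client code: knows nothing about the library behind JsonWriter.
final class CustomerExporter {
    private final JsonWriter json;

    CustomerExporter(JsonWriter json) {
        this.json = json;
    }

    String export(String name, String city) {
        Map<String, String> fields = new LinkedHashMap<>(); // keeps insertion order
        fields.put("name", name);
        fields.put("city", city);
        return json.write(fields);
    }
}
```

If the library behind JsonWriter is ever replaced, only a new implementation class has to be written. Callers such as CustomerExporter can be switched over one at a time, which fits the iterative update described above.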

Conclusion

As we have seen, a nonfunctional requirement is not that difficult to describe. With a short checklist, you can clearly define the important aspects for your project. It is not necessary to check all points for every code commit in the repository; this would in all probability just raise costs without resulting in higher benefits. Running a full check about a day before the release is an effective way to maintain quality in an agile context and helps to recognize where optimization is necessary. Points of interest (POI) for securing quality are the revisions in the code base for a release. This gives you comparable statistics and helps improve estimations.

Of course, in this short article, it is almost impossible to cover all aspects regarding quality. We hope our explanation helps you link theory to best practice through examples. In conclusion, this should be your main takeaway: a high level of automation within your infrastructure, like continuous integration, is extremely helpful, but doesn’t release you from manual code reviews and audits.

Checklist

- Follow common standards

- KISS – keep it simple, stupid!

- Same directory structure for different projects

- Simple META architecture, which can be reused as much as possible in other projects

- Define and follow coding styles

- When a release is prepared, no compiler warnings are accepted

- Aim for about 85% test coverage

- Avoid third-party libraries as much as possible

- Don’t support more than one technology for a specific problem (e. g., JSON)

- Wrap third-party code with a design pattern

- Avoid strong object/module coupling