
Spring Cleaning for Docker

Anyone interested in this somewhat specialized article doesn’t need an explanation of what Docker is and what this virtualization tool is used for. Therefore, this article is primarily aimed at system administrators, DevOps engineers, and cloud developers. For those who aren’t yet completely familiar with the technology, I recommend our Docker course: From Zero to Hero.

In a scenario where we regularly create new Docker images and instantiate various containers, our hard drive is put under considerable strain. Depending on their complexity, images can easily reach several hundred megabytes to gigabytes in size. To prevent creating new images from feeling like downloading a three-minute MP3 with a 56k modem, Docker uses a build cache. However, if there’s an error in the Dockerfile, this build cache can become quite bothersome. Therefore, it’s a good idea to clear the build cache regularly. Old container instances that are no longer in use can also lead to strange errors. So, how do you keep your Docker environment clean?

While docker rm <container-name> and docker rmi <image-id> will certainly get you quite far, in build environments like Jenkins or on server clusters this approach quickly becomes a time-consuming and tedious task. But first, let’s get an overview of the overall situation. The command docker system df helps us with this.

```
root:/home# docker system df
TYPE            TOTAL     ACTIVE    SIZE      RECLAIMABLE
Images          15        9         5.07GB    2.626GB (51%)
Containers      9         7         11.05MB   5.683MB (51%)
Local Volumes   226       7         6.258GB   6.129GB (97%)
Build Cache     0         0         0B        0B
```

Before I delve into the details, one important note: the commands presented here are very effective and irrevocably delete the corresponding data. Therefore, always try them in a test environment before using them on production systems. I’ve also found it helpful to keep the commands used to instantiate containers in a version-controlled text file, so that the containers can be recreated at any time.

The most obvious step in a Docker system cleanup is deleting unused containers. Specifically, the delete command permanently removes all Docker containers that are not running (i.e., not active). If you want to start from a clean slate on a Jenkins build node before a deployment, you can first stop all containers running on the machine with a single command.

This content is only available to subscribers.

The -f parameter suppresses the confirmation prompt, making it ideal for automated scripts. Deleting containers frees up relatively little disk space; the main resource drain comes from downloaded images, which can also be removed with a single command. However, an image can only be deleted if no container, not even a stopped one, still uses it. Removing unused containers offers another practical advantage: it releases ports blocked by containers. Each host port can be bound by only one container at a time, so leftover containers quickly lead to error messages. Therefore, we extend our script to delete all Docker images not currently used by any container.
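The subscriber-only commands are not reproduced here. As an illustration only, here is a minimal sketch of the steps just described, assuming a Bash shell and the standard Docker CLI. The helper names run and docker_cleanup and the DRY_RUN switch are hypothetical additions for this example, not part of the original script:

```shell
#!/usr/bin/env bash
# Sketch: reset a build node to a clean Docker state.
# Hypothetical helpers; set DRY_RUN=1 to only print the commands.

run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

docker_cleanup() {
  local running
  # IDs of all running containers (empty if none or if Docker is unreachable)
  running=$(docker ps -q 2>/dev/null || true)
  if [ -n "$running" ]; then
    # Intentional word splitting: one argument per container ID
    # shellcheck disable=SC2086
    run docker stop $running
  fi
  # Remove all stopped containers; -f skips the confirmation prompt
  run docker container prune -f
  # Remove all images not used by any container (-a: not only dangling ones)
  run docker image prune -a -f
}
```

Calling DRY_RUN=1 docker_cleanup first shows what would happen. Without the variable, the commands are executed.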

This content is only available to subscribers.

Another consequence of our efforts concerns Docker layers. For performance reasons, especially in CI environments, you should avoid using them. Docker volumes, on the other hand, are less problematic in this respect, but removing them is not harmless. Host folders and files that were bind-mounted into containers remain unaffected. However, docker volume prune deletes Docker-managed volumes together with the data stored in them. By default, only anonymous volumes are removed; the -a parameter extends this to all unused volumes, including named ones.

docker volume prune -a -f
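Because pruning volumes also deletes the data stored in them, it is worth looking at the candidates first. Here is a minimal sketch, assuming a Bash shell and a recent Docker version that supports -a for volume prune. The helper names are hypothetical:

```shell
#!/usr/bin/env bash
# Sketch: list unused volumes before deleting them.
# Hypothetical helpers; set DRY_RUN=1 to only print the prune command.

run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

prune_volumes() {
  echo "Volumes not used by any container:"
  # dangling=true selects volumes that no container references
  docker volume ls -q --filter dangling=true 2>/dev/null ||
    echo "(Docker not reachable)"
  # -a: also remove named volumes, not only anonymous ones
  run docker volume prune -a -f
}
```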

Another area affected by our cleanup efforts is the build cache. Especially if you’re experimenting with creating new Dockerfiles, it can be very useful to manually clear the cache from time to time. This prevents incorrectly created layers from persisting in the builds and causing unusual errors later in the instantiated container. The corresponding command is:

docker buildx prune -f
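While debugging a single Dockerfile, it is often enough to bypass the cache for one build instead of deleting it for all projects. Here is a sketch, assuming the standard docker build and docker buildx commands. The function name and the image tag are hypothetical:

```shell
#!/usr/bin/env bash
# Sketch: inspect the build cache and rebuild one image without it.
# Hypothetical helpers; set DRY_RUN=1 to only print the commands.

run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

rebuild_without_cache() {
  local tag="$1" context="${2:-.}"
  # Show how much disk space the build cache currently occupies
  run docker buildx du
  # Build again without reusing cached layers; existing cache entries stay
  run docker build --no-cache -t "$tag" "$context"
}
```

Example: rebuild_without_cache myapp:dev . rebuilds the Dockerfile in the current directory.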

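The most radical option is to release all unused resources at once. Docker provides a single command for this. It removes stopped containers, unused networks and images, and the build cache. Because of --volumes, it also removes unused anonymous volumes.

docker system prune -a -f --volumes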
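On a Jenkins or GitLab CI node, you may not want to throw away the images of the build that is currently running. docker system prune accepts an until filter for this purpose. Here is a sketch for a post-build step. The 24-hour default and the function name are arbitrary assumptions:

```shell
#!/usr/bin/env bash
# Sketch: periodic cleanup for a CI build node.
# Hypothetical helpers; set DRY_RUN=1 to only print the command.

run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

ci_prune() {
  local age="${1:-24h}"
  # Removes stopped containers, unused networks, unused images and build
  # cache created more than $age ago. Volumes are deliberately left out:
  # Docker rejects the until filter in combination with --volumes.
  run docker system prune -a -f --filter "until=${age}"
}
```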

We can, of course, also use the commands just presented in CI build environments like Jenkins or GitLab CI. However, this does not necessarily lead to the desired result. A proven approach for Continuous Integration/Continuous Deployment is to set up your own Docker registry to which you push your self-built images. This provides a good backup and caching system for the Docker images in use. Once built correctly, images can be conveniently distributed to different server instances via the local network without having to be rebuilt on each machine. Combined with a build node specifically optimized for Docker images and containers, the images can be tested thoroughly before they are used. Even on cloud platforms like Azure and AWS, you should prioritize good performance and resource efficiency: costs can escalate quickly and seriously disrupt an otherwise stable project.
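Setting up such a registry can be sketched with the official registry image. The port 5000, the container name and the image name myapp:1.0 are example values, and the functions are hypothetical helpers:

```shell
#!/usr/bin/env bash
# Sketch: run a local Docker registry and publish a self-built image to it.
# Hypothetical helpers; set DRY_RUN=1 to only print the commands.

REGISTRY="${REGISTRY:-localhost:5000}"   # example address

run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

start_registry() {
  # Official registry image, restarted automatically with the Docker daemon
  run docker run -d -p 5000:5000 --restart=always --name registry registry:2
}

publish_image() {
  local image="$1"   # e.g. myapp:1.0
  # The registry address becomes part of the image name
  run docker tag "$image" "${REGISTRY}/${image}"
  run docker push "${REGISTRY}/${image}"
}
```

Other hosts then pull the image with docker pull <registry-host>:5000/myapp:1.0. As long as the registry runs without TLS, those hosts must list it under insecure-registries in /etc/docker/daemon.json.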

In this article, we have seen that in-depth knowledge of the tools used offers several opportunities for cost savings. The motto “We do it because we can” is particularly unhelpful in a commercial environment and can quickly degenerate into an expensive waste of resources.

Privacy

I constantly encounter statements like, “I use Apple because of the data privacy,” or “There are no viruses under Linux,” and so on and so forth. In real life, I just chuckle to myself and refrain from replying. These people are usually devotees of a particular brand, which they worship and would even defend with their lives. Therefore, I save my energy for more worthwhile things, like writing this article.

My aim is to use as few technical details and jargon as possible so that people without a technical background can also access this topic. Certainly, some skeptics might demand proof to support my claims. To them, I say that there are plenty of keywords for each statement that you can use to search for yourself and find plenty of primary sources that exist outside of AI and Wikipedia.

When one ponders what freedom truly means, one often encounters statements like: “Freedom is doing what you want without infringing on the freedom of others.” This definition also includes the fact that confidential information should remain confidential. However, efforts to maintain this confidentiality existed long before the availability of electronic communication devices. It is no coincidence that there is an age-old art called cryptography, which renders messages transmitted via insecure channels incomprehensible to the uninitiated. The fact that the desire to know other people’s thoughts is very old is also reflected in the saying that the two oldest professions of humankind are prostitution and espionage. Therefore, one might ask: Why should this be any different in the age of communication?

Particularly thoughtless individuals approach the topic with the attitude that they have nothing to hide anyway, so why should they bother with their own privacy? I personally belong to the group of people who consider this attitude very dangerous, as it opens the floodgates to abuse by power-hungry groups. Everyone has areas of their life that they don’t want dragged into the public eye. These might include specific sexual preferences, infidelity to a partner, or a penchant for gambling—things that can quickly shatter a seemingly perfect facade of moral integrity.

In East Germany, many people believed they were too insignificant for the notorious domestic intelligence service, the Stasi, to be interested in them. The opening of the Stasi files after German reunification demonstrated just how wrong they were. In this context, I would like to point out the existing legal framework in the EU, which boasts achievements such as hate speech laws, chat monitoring, and data retention. The private sector also has ample reason to learn more about every individual. This allows them to manipulate people effectively and encourage them to purchase services and products. One goal of companies is to determine the optimal price for their products and services, thus maximizing profit. This is achieved through methods of psychology. Or do you really believe that products like a phone that can take photos are truly worth the price they’re charged? So we see: there are plenty of reasons why personal data can indeed be highly valuable. Let’s therefore take a look at the many technological half-truths circulating in the public sphere. I’ve heard many of these half-truths from technology professionals themselves, who haven’t questioned many things.

Before I delve into the details, I’d like to make one essential point. There is no such thing as secure and private communication when electronic devices are involved. Anyone wanting to have a truly confidential conversation would have to go to an open field in strong winds, with a visibility of at least 100 meters, and cover their mouth while speaking. Of course, I realize that microphones could be hidden there as well. This statement is meant to be illustrative and demonstrates how difficult it is to create a truly confidential environment.

Let’s start with the popular brand Apple. Many Apple users believe their devices are particularly secure. This is only true to the extent that strangers attempting to gain unauthorized access to the devices face significant obstacles. The operating systems incorporate numerous mechanisms that allow users to block applications and content, for example, on their phones.

Microsoft is no different and goes several steps further. Ever since the internet became widely available, there has been much speculation about what telemetry data Windows sends to Microsoft. Windows 11 takes things to a whole new level, recording every keystroke and, with its Recall feature, taking a screenshot every few seconds. Supposedly, this data is only stored locally on the computer. Of course, you can believe that if you like, but even if it were true, it’s a massive security vulnerability. Any attacker who compromises a Windows 11 computer can then read this data and gain access to online banking and all sorts of other accounts.

Furthermore, Windows 11 refuses to run on supposedly outdated processors. That Windows has always been very resource-intensive is nothing new. However, the real reason for excluding older CPUs is different. Newer CPU generations include a so-called security feature that allows the computer to be uniquely identified and deactivated via the internet. The key terms here are the Pluton security processor and the Trusted Platform Module (TPM 2.0).

The extent of Microsoft’s desire to collect all possible information about its users is also demonstrated by the changes to its terms and conditions around 2022. These included a new section granting Microsoft permission to use all data obtained through its products to train artificial intelligence. Furthermore, Microsoft reserves the right to exclude users from all Microsoft products if hate speech is detected.

But don’t worry, Microsoft isn’t the only company with such clauses in its terms and conditions. Meta, the company behind Facebook and WhatsApp, and the communication platform Zoom operate similarly. The list of such applications is, of course, much longer. Everyone is invited to imagine the possibilities that the practices described so far open up.

I’ve already mentioned Apple as problematic in the area of security and privacy. But Android, Google’s operating system for smart TVs and phones, also offers plenty of scope for criticism. It’s not entirely without reason that you can no longer remove the batteries from these phones. Android behaves just like Windows and sends all sorts of telemetry data to its parent company. Add to that the scandal involving the manufacturer Samsung, which came to light in 2025. Its devices shipped with a hidden program called AppCloud from an Israeli company, the purpose of which can only be guessed at. Perhaps it’s also worth remembering that in September 2024, pagers exploded in Lebanon and Syria, targeting members of Hezbollah, whom Israel considers enemies. It’s no secret in the security community that Israel is at the forefront of cybersecurity and cyberattacks.

Another issue with phones is the use of so-called messengers. Besides well-known ones like WhatsApp and Telegram, there are also a few niche solutions like Signal and Session. All these applications advertise end-to-end encryption for secure communication (Telegram, however, only in its optional secret chats). It’s true that attackers have difficulty accessing information when they only intercept network traffic. However, what happens to a message after successful transmission and decryption on the target device is a different matter entirely. How else can Meta’s terms and conditions, with the clauses already mentioned, be explained?

Considering all the facts mentioned, it’s no wonder that platforms such as Apple’s, Windows, and Android have introduced forced updates. Of course, not everything is about total control. Planned obsolescence, which makes devices age prematurely so that they are replaced by newer models, is another reason.

Of course, there are also plenty of options that promise their users exceptional security. First and foremost is the free and open-source operating system Linux. There are many different Linux distributions, and not all of them prioritize security and privacy equally. The Ubuntu distribution, published by Canonical, regularly receives criticism. For example, around 2013, the Unity desktop was riddled with ads, which drew considerable backlash. The notion that there are no viruses under Linux is also a myth. They certainly exist, and a well-known antivirus scanner for Linux is ClamAV. However, such scanners are less widespread because there are far fewer Linux installations than Windows installations in private homes. Furthermore, Linux users are still often perceived as somewhat nerdy and less likely to click on suspicious links. But those who install all the popular applications like Skype, Dropbox, AI agents, and so on under Linux gain no real security advantage over users of Big Tech platforms.

The situation is similar with so-called “de-Googled” smartphones. Here, too, the very limited choice of supported hardware is a problem. Everyday usability also often reveals limitations. These already become evident within families and among friends, who often rely on WhatsApp and similar apps. Even online banking can present significant challenges, as banks, for security reasons, only offer their apps through the verified Google Play Store.

As you can see, this topic is quite extensive, and I haven’t even listed all the points, nor have I delved into them in great depth. I hope, however, that I’ve been able to raise awareness, at least to the point that smartphones shouldn’t be taken everywhere, and that more time should be spent in real life with other people, free from all these technological devices.

Harvest Time

Installing Python programs via PIP on Linux

Many years ago, the scripting language Python, named after the British comedy troupe Monty Python, replaced the venerable Perl on Linux. As a result, practically every Linux distribution includes a Python interpreter by default. A pretty convenient thing, really. Or so it seems, if it weren’t for the pesky issue of security. But let’s start at the beginning. This short article is intended for people who want to run software written in Python on Linux but don’t know Python or have no programming experience. Therefore, here is a little background information to help you understand what this is all about.

All current Linux distributions derived from Debian, such as Ubuntu, Mint, and so on, throw a cryptic error when you try to install a Python program with pip. This error comes from a safeguard (specified in PEP 668). It prevents important system libraries written in Python from being overwritten by additionally installed programs, which could cause malfunctions in the operating system. Unfortunately, as is so often the case, the devil is in the details.
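You can check directly whether this safeguard is active. According to PEP 668, pip looks for a marker file named EXTERNALLY-MANAGED in the interpreter’s standard library directory. Here is a small sketch (the function name is hypothetical) that prints yes or no:

```shell
#!/usr/bin/env bash
# Sketch: does the system Python carry the PEP 668 marker file?
is_externally_managed() {
  python3 - <<'EOF'
import os
import sys
import sysconfig

if sys.prefix != sys.base_prefix:
    # Inside a virtual environment, pip ignores the marker
    print("no")
else:
    marker = os.path.join(sysconfig.get_path("stdlib"), "EXTERNALLY-MANAGED")
    print("yes" if os.path.isfile(marker) else "no")
EOF
}
```

On Debian 12 or 13, the system Python should report yes. Inside an activated virtual environment, the function reports no.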

```
ed@P14s:~$ python3 -m pip install ansible
error: externally-managed-environment

× This environment is externally managed
╰─> To install Python packages system-wide, try apt install
    python3-xyz, where xyz is the package you are trying to
    install.

    If you wish to install a non-Debian-packaged Python package,
    create a virtual environment using python3 -m venv path/to/venv.
    Then use path/to/venv/bin/python and path/to/venv/bin/pip. Make
    sure you have python3-full installed.

    If you wish to install a non-Debian packaged Python application,
    it may be easiest to use pipx install xyz, which will manage a
    virtual environment for you. Make sure you have pipx installed.

    See /usr/share/doc/python3.13/README.venv for more information.
```

The solution, therefore, is to set up a virtual environment. Debian 12, as well as Debian 13, which was released in August 2025, uses Python 3. Python 2 and Python 3 are not compatible with each other: programs written in Python 2 will not run under Python 3 without modification.
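Following the hint in the error message, you can create a virtual environment manually. Here is a minimal sketch. The location ~/.venvs/<package> and the VENV_ROOT variable are arbitrary choices for this example:

```shell
#!/usr/bin/env bash
# Sketch: install a Python application into its own virtual environment.
# Hypothetical helpers; set DRY_RUN=1 to only print the commands.

run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

venv_install() {
  local pkg="$1"
  local venv="${VENV_ROOT:-$HOME/.venvs}/$pkg"
  # Create the environment (on Debian this needs python3-venv or python3-full)
  run python3 -m venv "$venv"
  # Use the environment's own pip, never the system-wide one
  run "$venv/bin/pip" install "$pkg"
}
```

The commands of the installed program then end up in ~/.venvs/<package>/bin.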

Python programs are installed with a so-called package manager; most programming languages have such a mechanism. The package manager for Python is called PIP, and this is where the first complications arise: there are pip, pip3, and pipx. Similar naming inconsistencies exist for the Python interpreter itself. Version 2 is started on the console with python, and version 3 with python3. Since this article refers to Debian 12 / Debian 13 and their derivatives, we know that Python 3 is used. To find out the exact Python version, enter python3 -V in the shell, which shows version 3.13.5 in my case. If you try python or python2, you get an error message that the command could not be found.
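You can check the available interpreter commands in one go. Here is a small sketch (the function name is hypothetical) that reports each command and its version:

```shell
#!/usr/bin/env bash
# Sketch: report which Python interpreter commands exist on this system.
check_pythons() {
  local cmd
  for cmd in python python2 python3; do
    if command -v "$cmd" >/dev/null 2>&1; then
      # Python 2 prints its version to stderr, so merge both streams
      echo "$cmd: $("$cmd" -V 2>&1)"
    else
      echo "$cmd: not found"
    fi
  done
}
```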

Let’s first look at what pip, pip3, and pipx actually mean. PIP stands for Package Installer for Python [1]. pip was used with Python 2, while pip3 is the variant for Python 3. PIPX [2] is special: it installs each Python application into its own isolated environment, which is exactly what we need. Therefore, the next step is to install PIPX, which is easy with the Linux package manager: sudo apt install pipx. To check which PIPX version is installed, use the command python3 -m pipx --version, which in my case outputs 1.7.1. This confirms that PIPX is installed alongside the system’s Python 3.
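The installation steps can be sketched as follows. pipx ensurepath is an additional step not mentioned above. It adds ~/.local/bin, the directory where pipx places application commands, to the PATH. The function name is hypothetical:

```shell
#!/usr/bin/env bash
# Sketch: install pipx on a Debian-based system.
# Hypothetical helpers; set DRY_RUN=1 to only print the commands.

run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

setup_pipx() {
  # Install pipx from the distribution's repositories
  run sudo apt install -y pipx
  # Make sure ~/.local/bin (where pipx links app commands) is on the PATH
  run pipx ensurepath
  # Confirm the installation
  run python3 -m pipx --version
}
```

The PATH change from ensurepath only takes effect in a new shell session.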

With this prerequisite in place, I can now install Python applications using PIPX. The basic command is pipx install <package>. As a practical example, we will now install Ansible. pip and pip3 should be avoided here: on Debian, they first have to be installed separately, and outside a virtual environment they lead to the cryptic error mentioned earlier.

Ansible [3] is a program written in Python that has supported Python 3 since version 2.5. Here is a brief overview of what Ansible is. It belongs to the class of configuration management tools and allows systems to be provisioned fully automatically using a script (called a playbook). Provisioning can target, for example, a virtual machine. It includes setting hardware resources (RAM, HDD, CPU cores, etc.), installing the operating system, configuring users, and installing and configuring additional programs.

First, we need to install Ansible with pipx install ansible. Once the installation is complete, we can verify its success with pipx list, which in my case produced the following output:

```
ed@local:~$ pipx list
venvs are in /home/ed/.local/share/pipx/venvs
apps are exposed on your $PATH at /home/ed/.local/bin
manual pages are exposed at /home/ed/.local/share/man
   package ansible 12.1.0, installed using Python 3.13.5
    - ansible-community
```

The installation isn’t quite finished yet, as the command ansible --version returns an error message. As the output of pipx list shows, the package ansible (the Ansible community package) only exposes the command ansible-community. The ansible command itself belongs to the dependency ansible-core, and pipx does not expose the commands of dependencies by default. To check the version, use ansible-community --version instead, which currently shows version 12.2.0 for me.

If you prefer to type ansible instead of ansible-community in the console, you can do so using an alias. Setting the alias isn’t entirely straightforward, as parameters need to be passed to it. How to do this will be covered in another article.
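Instead of an alias, pipx can also expose the commands of dependencies. The Ansible installation guide uses pipx install --include-deps ansible, which makes ansible, ansible-playbook and the other ansible-core commands available. Here is a sketch (function name hypothetical) that checks which of them are on the PATH:

```shell
#!/usr/bin/env bash
# Sketch: see which Ansible commands are available on the PATH.
#
# If "ansible" and "ansible-playbook" are missing, reinstall and let pipx
# expose the commands of dependencies such as ansible-core as well:
#   pipx install --force --include-deps ansible

check_ansible_cmds() {
  local cmd
  for cmd in ansible-community ansible ansible-playbook; do
    if command -v "$cmd" >/dev/null 2>&1; then
      echo "$cmd: $(command -v "$cmd")"
    else
      echo "$cmd: missing"
    fi
  done
}
```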

Occasionally, Python programs cannot be installed via PIPX. One example is streamdeck-ui [4]. For a long time, Elgato’s Stream Deck hardware could be used under Linux with the Python-based streamdeck-ui. There is now an alternative called Boatswain, which is not written in Python and should be used instead. Installing streamdeck-ui fails with an error caused by its dependency on the ‘pillow’ library. The installation script in the streamdeck-ui Git repository first installs pip3 and then obtains streamdeck-ui through it. When it reaches the command pip3 install --user streamdeck_ui, you receive the “externally-managed-environment” error described at the beginning of this article. Since we’re already using PIPX, creating yet another virtual environment isn’t productive, as it only leads to the same error with the pillow library.

I’m not a Python programmer myself, but I do have some experience with complex dependencies in large Java projects. I also actually find streamdeck-ui better than Boatswain, so I took a look around its GitHub repository. The first thing I noticed is that the last activity was in spring 2023, which makes a revival rather unlikely. Nevertheless, let’s take a closer look at the error message to get an idea of how to narrow down such problems when installing other programs.

```
Fatal error from pip prevented installation. Full pip output in file:
    /home/ed/.local/state/pipx/log/cmd_pip_errors.log

pip seemed to fail to build package:
    'pillow'
```

A look at the corresponding log file reveals that the pillow dependency is restricted to versions below 7 and above 6.1, which results in version 6.2.2. Pillow is the Python 3 capable fork of PIL (Python Imaging Library), a Python 2 library for processing graphics, and will reach version 12 by the end of 2025. Pillow 6.2.2 is far older than Python 3.13, so pip has to build it from source, and this build fails. The problem could potentially be resolved by using a more recent version of pillow. However, this will most likely require adjustments to the streamdeck-ui code, as some of the functions it uses have probably changed incompatibly since version 6.2.2.

This analysis shows that streamdeck-ui is no more likely to install with pip3 than with pipx. Anyone who considers downgrading to Python 2 just to get old programs running should do so only in a separate, isolated environment, for example using Docker. Python 2 reached its end of life in January 2020 and has not received updates or security patches since. For that reason, installing it alongside Python 3 on your operating system is not a good idea.
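Such an isolated Python 2 environment can be a throwaway container. Here is a sketch, assuming the python:2.7 tag of the official Python image is still available on Docker Hub (it no longer receives updates). The function name and the mount of the current directory are choices made for this example:

```shell
#!/usr/bin/env bash
# Sketch: run a command with Python 2.7 inside a disposable container.
# Hypothetical helpers; set DRY_RUN=1 to only print the command.

run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

run_py2() {
  # --rm deletes the container on exit; the current directory is mounted
  # at /work so that legacy scripts can be run from there
  run docker run --rm -it -v "$PWD":/work -w /work python:2.7 "$@"
}
```

Example: run_py2 python legacy_script.py, where legacy_script.py stands for any old Python 2 program.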

So we see that the error message described at the beginning isn’t so cryptic after all if you simply use PIPX. If you still can’t get your program to install, a look at the error message will usually tell you that you’re trying to use an outdated and no longer maintained program.

Resources

This content is only available to subscribers.

Disk-Jock-Ey II

After discussing more general issues such as file systems and partitions in the first part of this workshop, we’ll turn to various diagnostic techniques in the second and final part of the series. Our primary tool for this will be Bash, as the following tools are all command-line based.

This section, too, requires the utmost care. The practices described here may result in data loss if used improperly. I assume no liability for any damages.

Let’s start with the possibility of finding out how much free space we still have on our hard drive. Anyone who occasionally messes around on servers can’t avoid the df command. After all, the SSH client doesn’t have a graphical interface, and you have to navigate the shell.

With df -hT, all mounted file systems, physical and virtual, are listed in human-readable units (-h) together with their file system type (-T).

ed@local:~$ df -hT

Filesystem Type Size Used Avail Use% Mounted on

udev devtmpfs 32G 0 32G 0% /dev

tmpfs tmpfs 6.3G 2.8M 6.3G 1% /run

/dev/nvme0n1p2 ext4 1.8T 122G 1.6T 8% /

tmpfs tmpfs 32G 8.0K 32G 1% /dev/shm

efivarfs efivarfs 196K 133K 59K 70% /sys/firmware/efi/efivars

tmpfs tmpfs 5.0M 16K 5.0M 1% /run/lock

tmpfs tmpfs 1.0M 0 1.0M 0% /run/credentials/systemd-journald.service

tmpfs tmpfs 32G 20M 32G 1% /tmp

/dev/nvme0n1p1 vfat 975M 8.8M 966M 1% /boot/efi

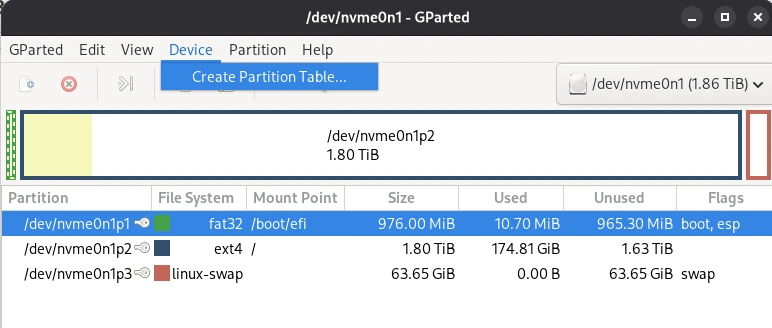

tmpfs tmpfs 6.3G 224K 6.3G 1% /run/user/1000As we can see in the output, the mount point / is an ext4 file system, with an NVMe SSD with a capacity of 1.8 TB, of which approximately 1.2 TB is still free. If other storage devices, such as external hard drives or USB drives, were present, they would also be included in the list. It certainly takes some practice to sharpen your eye for the relevant details. In the next step, we’ll practice a little diagnostics.
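For regular checks, for example from a cron job, you can filter out the relevant columns. Here is a sketch assuming GNU df (for --output and -x). The function name and the default threshold of 80% are arbitrary:

```shell
#!/usr/bin/env bash
# Sketch: list real file systems whose usage is at or above a threshold.
# Pseudo file systems (tmpfs, devtmpfs) are excluded.
disk_usage_over() {
  local threshold="${1:-80}"
  df --output=pcent,target -x tmpfs -x devtmpfs 2>/dev/null |
    awk -v t="$threshold" 'NR > 1 {
      p = $1
      sub(/%/, "", p)                      # "8%" -> "8"
      if (p != "-" && p + 0 >= t) print $2 " is " $1 " full"
    }'
}
```

Example: disk_usage_over 90 prints a line only for file systems that are at least 90% full.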

lsblk

If the output of df is too confusing, you can also use the lsblk tool, which provides a more understandable listing for beginners.
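On systems with snap packages, the listing below is dominated by loop devices, one per mounted snap. lsblk can hide them by their major device number, which is 7 for loop devices. Here is a sketch (the function name and the column selection are arbitrary):

```shell
#!/usr/bin/env bash
# Sketch: block devices without loop devices (major number 7).
list_disks() {
  lsblk -e 7 -o NAME,SIZE,TYPE,FSTYPE,MOUNTPOINT
}
```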

```
ed@local:~$ sudo lsblk
NAME            MAJ:MIN RM   SIZE RO TYPE MOUNTPOINTS
loop0             7:0    0     4K  1 loop /snap/bare/5
loop1             7:1    0  73.9M  1 loop /snap/core22/2139
loop2             7:2    0 516.2M  1 loop /snap/gnome-42-2204/226
loop3             7:3    0  91.7M  1 loop /snap/gtk-common-themes/1535
loop4             7:4    0  10.8M  1 loop /snap/snap-store/1270
loop5             7:5    0  50.9M  1 loop /snap/snapd/25577
loop6             7:6    0  73.9M  1 loop /snap/core22/2133
loop7             7:7    0  50.8M  1 loop /snap/snapd/25202
loop8             7:8    0   4.2G  0 loop
└─veracrypt1    254:0    0   4.2G  0 dm   /media/veracrypt1
sda               8:0    1 119.1G  0 disk
└─sda1            8:1    1 119.1G  0 part
sr0              11:0    1  1024M  0 rom
nvme0n1         259:0    0   1.9T  0 disk
├─nvme0n1p1     259:1    0   976M  0 part /boot/efi
├─nvme0n1p2     259:2    0   1.8T  0 part /
└─nvme0n1p3     259:3    0  63.7G  0 part [SWAP]
```

S.M.A.R.T.

To thoroughly test newly acquired storage before use, we’ll use the S.M.A.R.T. (Self-Monitoring, Analysis and Reporting Technology) tools. You can do this either with the Disks program introduced in the first part of this series or via Bash with smartctl from the smartmontools package, which provides more detailed information. With df -hT and lsblk, we’ve already identified the NVMe SSD nvme0n1. Its controller is /dev/nvme0, which we pass to smartctl.

```
ed@local:~$ sudo smartctl --all /dev/nvme0
smartctl 7.4 2023-08-01 r5530 [x86_64-linux-6.12.48+deb13-amd64] (local build)
Copyright (C) 2002-23, Bruce Allen, Christian Franke, www.smartmontools.org

=== START OF INFORMATION SECTION ===
Model Number:                       SAMSUNG MZVL22T0HDLB-00BLL
Serial Number:                      S75ZNE0W602153
Firmware Version:                   6L2QGXD7
PCI Vendor/Subsystem ID:            0x144d
IEEE OUI Identifier:                0x002538
Total NVM Capacity:                 2,048,408,248,320 [2.04 TB]
Unallocated NVM Capacity:           0
Controller ID:                      6
NVMe Version:                       1.3
Number of Namespaces:               1
Namespace 1 Size/Capacity:          2,048,408,248,320 [2.04 TB]
Namespace 1 Utilization:            248,372,908,032 [248 GB]
Namespace 1 Formatted LBA Size:     512
Namespace 1 IEEE EUI-64:            002538 b63101bf9d
Local Time is:                      Sat Oct 25 08:07:32 2025 CST
Firmware Updates (0x16):            3 Slots, no Reset required
Optional Admin Commands (0x0017):   Security Format Frmw_DL Self_Test
Optional NVM Commands (0x0057):     Comp Wr_Unc DS_Mngmt Sav/Sel_Feat Timestmp
Log Page Attributes (0x0e):         Cmd_Eff_Lg Ext_Get_Lg Telmtry_Lg
Maximum Data Transfer Size:         128 Pages
Warning Comp. Temp. Threshold:      83 Celsius
Critical Comp. Temp. Threshold:     85 Celsius

Supported Power States
St Op     Max   Active     Idle   RL RT WL WT  Ent_Lat  Ex_Lat
 0 +     8.41W       -        -    0  0  0  0        0       0
 1 +     8.41W       -        -    1  1  1  1        0     200
 2 +     8.41W       -        -    2  2  2  2        0     200
 3 -   0.0500W       -        -    3  3  3  3     2000    1200
 4 -   0.0050W       -        -    4  4  4  4      500    9500

Supported LBA Sizes (NSID 0x1)
Id Fmt  Data  Metadt  Rel_Perf
 0 +     512       0         0

=== START OF SMART DATA SECTION ===
SMART overall-health self-assessment test result: PASSED

SMART/Health Information (NVMe Log 0x02)
Critical Warning:                   0x00
Temperature:                        37 Celsius
Available Spare:                    100%
Available Spare Threshold:          10%
Percentage Used:                    0%
Data Units Read:                    43,047,167 [22.0 TB]
Data Units Written:                 25,888,438 [13.2 TB]
Host Read Commands:                 314,004,907
Host Write Commands:                229,795,952
Controller Busy Time:               2,168
Power Cycles:                       1,331
Power On Hours:                     663
Unsafe Shutdowns:                   116
Media and Data Integrity Errors:    0
Error Information Log Entries:      0
Warning Comp. Temperature Time:     0
Critical Comp. Temperature Time:    0
Temperature Sensor 1:               37 Celsius
Temperature Sensor 2:               37 Celsius

Error Information (NVMe Log 0x01, 16 of 64 entries)
No Errors Logged

Self-test Log (NVMe Log 0x06)
Self-test status: No self-test in progress
No Self-tests Logged
```

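This is a very useful source of information, especially if you’re planning to install a used drive. Fortunately, my system SSD shows no abnormalities after almost two years of use. The health self-assessment reports PASSED, 0% of the rated endurance is used, and no media or data integrity errors have been logged.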
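To keep an eye on a drive over time, you can filter out a few of these values automatically. Here is a sketch (the function name is hypothetical) that assumes the NVMe output format shown above. The conversion uses the NVMe definition of a data unit as 1,000 blocks of 512 bytes, which also explains the 13.2 TB shown next to “Data Units Written”:

```shell
#!/usr/bin/env bash
# Sketch: condense "smartctl --all" output of an NVMe drive to key values.
# Reads the smartctl output from standard input.
smart_summary() {
  awk '
    /^SMART overall-health/ || /^Critical Warning:/ ||
    /^Available Spare:/ || /^Percentage Used:/ ||
    /^Media and Data Integrity Errors:/ || /^Unsafe Shutdowns:/ {
      $1 = $1   # collapse the alignment spaces
      print
    }
    /^Data Units Written:/ {
      units = $4
      gsub(/,/, "", units)   # "25,888,438" -> "25888438"
      # One NVMe data unit = 1,000 blocks of 512 bytes
      printf "Data written: %.2f TB\n", units * 512000 / 1e12
    }
  '
}
```

Usage: sudo smartctl --all /dev/nvme0 | smart_summary. For the values above, this reports 13.25 TB written.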

fdisk

The classic among disk utilities is fdisk; a program of the same name also existed on DOS and older Windows systems. With fdisk, you can not only partition drives but also extract information about them: the -l parameter lists the partition tables, and -x shows more details. The fdisk program is quite complex. For partitioning and formatting disks, I therefore recommend the graphical tools Disks and GParted presented in the first part of this series. With a graphical interface, the likelihood of making mistakes is much lower than in the shell.
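In the fdisk -l listing below, each partition is described by its start and end sector. The number of sectors is End - Start + 1, and with 512-byte sectors this gives the size. Here is a small sketch (hypothetical function name) that reproduces the Size column:

```shell
#!/usr/bin/env bash
# Sketch: partition size from start and end sector, as listed by fdisk -l.
# Assumes 512-byte sectors ("Units: sectors of 1 * 512 = 512 bytes").
part_size_gib() {
  local start="$1" end="$2"
  awk -v s="$start" -v e="$end" \
    'BEGIN { printf "%.1f GiB\n", (e - s + 1) * 512 / (1024 * 1024 * 1024) }'
}
```

For the swap partition, part_size_gib 3867305984 4000796671 prints 63.7 GiB, matching the fdisk output. For the root partition, part_size_gib 2000896 3867305983 prints 1843.1 GiB, the 1.8T that fdisk shows.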

ed@local:~$ sudo fdisk -l

Disk /dev/nvme0n1: 1.86 TiB, 2048408248320 bytes, 4000797360 sectors

Disk model: SAMSUNG MZVL22T0HDLB-00BLL

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disklabel type: gpt

Disk identifier: 347D3F20-0228-436D-9864-22A5D36039D9

Device Start End Sectors Size Type

/dev/nvme0n1p1 2048 2000895 1998848 976M EFI System

/dev/nvme0n1p2 2000896 3867305983 3865305088 1.8T Linux filesystem

/dev/nvme0n1p3 3867305984 4000796671 133490688 63.7G Linux swap

Disk /dev/sda: 119.08 GiB, 127865454592 bytes, 249737216 sectors

Disk model: Storage Device

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disklabel type: dos

Disk identifier: 0xa82a04bd

Device Boot Start End Sectors Size Id Type

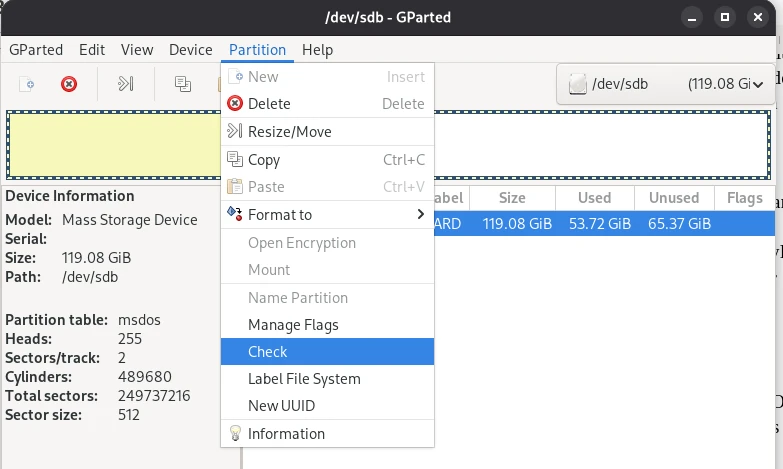

/dev/sda1 2048 249737215 249735168 119.1G 83 LinuxBasic repair with consistency check: fsck

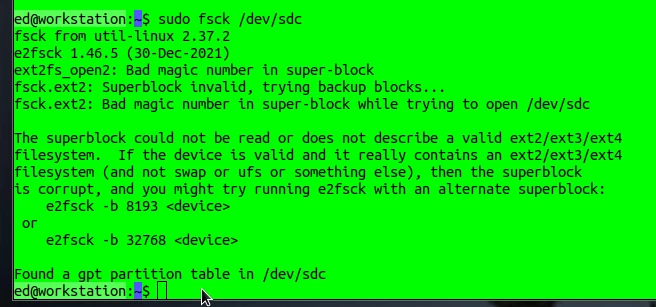

If, contrary to expectations, problems arise, you can use the fsck (File System Consistency Check) tool to check the file system and repair it if necessary. You must specify the relevant partition, and the file system must not be mounted, because checking a mounted file system can damage it.

sudo fsck /dev/sdc

As you can see in the screenshot, some time ago I had a partition with a defective superblock, making it impossible to access the data. The reason for the error was a defective memory cell in the SSD. With a little effort, I was able to access the data and copy it to another storage device. This doesn’t always work. Therefore, I would like to give a little advice: always be well prepared before such rescue operations. This means having a sufficiently large, functioning target drive ready so that you can immediately create a backup if the operation is successful. Many of the operations presented here change the data on the storage device, and it is not certain whether subsequent access will be successful.
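In the spirit of this advice, a cautious first pass can be read-only. Here is a sketch for ext2/3/4 file systems, which fsck hands over to e2fsck. The function name is hypothetical, and you should double-check the device name with lsblk before use:

```shell
#!/usr/bin/env bash
# Sketch: read-only check of an unmounted ext2/3/4 partition.
# Hypothetical helpers; set DRY_RUN=1 to only print the commands.

run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

safe_fsck() {
  local dev="$1"
  # Refuse to touch a mounted file system
  if findmnt --source "$dev" >/dev/null 2>&1; then
    echo "$dev is mounted; unmount it first" >&2
    return 1
  fi
  # -n opens the file system read-only and answers "no" to all questions.
  # If the primary superblock is damaged, retry with the backup copy that
  # file systems with 4 KiB blocks keep at block 32768.
  run sudo e2fsck -n "$dev" || run sudo e2fsck -n -b 32768 "$dev"
}
```

Only when the read-only pass looks sensible, and a backup target is ready, should you run the repair without -n.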

Linux sysadmin joke

Linux system administrators like to recommend that beginners delete the French language files, which they supposedly don’t need, to save space. To do this, type the command sudo rm -fr / in the console and press Enter. Do not do this under any circumstances, as the command deletes the entire file system. It is considered the most dangerous thing you can do in Linux. rm initiates the deletion, the parameters -f and -r stand for force and recursive, respectively, and the inconspicuous / refers to the root directory. Current GNU versions of rm refuse to operate on / itself unless --no-preserve-root is given, but this protection does not cover variants such as rm -fr /*.

Fake Check

Sometimes you buy storage devices that claim a high capacity, but the actual capacity is nowhere near it. These are so-called fake devices. The problem with them is that any data written beyond the real capacity ends up in oblivion. Unfortunately, you don’t receive an error message and often only notice the problem when you try to access the data again at a later time.

A particularly unpleasant way to end up with fake devices is through a loophole at Amazon. To ensure reliable and fast shipping, Amazon offers its sellers the option of storing goods in its own fulfillment centers. Sellers who use this option are also given priority on the Amazon website. The problem is that identical products from different sellers all end up in the same box, which makes perfect sense logistically. Criminals shamelessly exploit this situation and send their fake products to Amazon. Afterwards, it’s impossible to identify the original supplier.
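The principle behind such capacity checks can be sketched in a few lines of Bash: fill the device with data whose checksums are known, then read everything back and compare. This is not the article's (subscriber-only) method; dedicated tools such as f3 (f3write/f3read) implement the idea thoroughly. The function names are hypothetical. DIR is assumed to be the mount point of the device under test, COUNT should roughly match the free space in MiB as reported by df -m, and the checksum file should be kept on a different, trusted drive. Unmount and re-plug the device between writing and verifying; otherwise the data may be served from the page cache instead of the device.

```bash
#!/usr/bin/env bash
# Hypothetical helpers illustrating the write-then-verify principle.

# write_chunks DIR COUNT SUMFILE
# Writes COUNT files of 1 MiB random data into DIR and stores their
# SHA-256 checksums in SUMFILE.
write_chunks() {
  local dir=$1 count=$2 sumfile=$3 i
  : > "$sumfile" || return 1
  for ((i = 1; i <= count; i++)); do
    head -c 1048576 /dev/urandom > "$dir/chunk_$i.bin" || return 1
    (cd "$dir" && sha256sum "chunk_$i.bin") >> "$sumfile" || return 1
  done
  sync   # flush the written data to the device
}

# verify_chunks DIR SUMFILE
# Re-reads every file and compares it with its stored checksum.
# Returns 0 only if all files are intact; damaged files are reported.
verify_chunks() {
  local dir=$1 sumfile
  sumfile=$(readlink -f "$2") || return 1
  (cd "$dir" && sha256sum --quiet -c "$sumfile")
}
```

On a genuine device, verify_chunks returns success; on a fake one, the files written beyond the real capacity fail the comparison.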

Subscription

This content is only available to subscribers.

Network Attached Storage (NAS)

Another scenario for dealing with mass storage under Linux involves so-called NAS hard drives, which are connected to the router via a network cable and are then available to all devices on the network, such as computers and televisions. To ensure that only authorized users can access the files, a password can be set. Affordable solutions for home use are available, for example, from Western Digital with its MyCloud product series. It would be very practical if your Linux system could mount the NAS automatically during the boot process, so that it can be used immediately without a separate login. To do this, you need to determine the NAS URL, for example, from the user manual or via a network scan. Once you have all the necessary information, such as the URL/IP, login name, and password, you can register the NAS with an entry in the /etc/fstab file. We already learned about the fstab file in the section on the SWAP file.

First, we install support for the SMB/CIFS protocol (on Debian-based systems, the cifs-utils package), which NAS systems commonly use to share their file systems.

Subscription

This content is only available to subscribers.

In the next step, we create a file that enables automatic login. We name it nas-login and save it as /etc/nas-login. It contains our login information:

user=accountname

password=s3cr3t
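Because this file stores the password in plain text, it should be readable only by its owner (root). A minimal Bash sketch, with the hypothetical function name write_credentials, creates the file with mode 600 and reads the password from standard input, so that it does not end up in the shell history:

```bash
#!/usr/bin/env bash
# write_credentials FILE USER (hypothetical helper)
# Reads the password from standard input (not echoed on a terminal) and
# writes a credentials file in the user=/password= format shown above.
write_credentials() {
  local file=$1 user=$2 pass
  IFS= read -rs pass || return 1
  # umask 077 inside the subshell: a newly created file gets mode 600
  (umask 077 && printf 'user=%s\npassword=%s\n' "$user" "$pass" > "$file")
  # tighten the permissions as well in case the file already existed
  chmod 600 "$file"
}
```

In a root shell (for example after sudo -i), write_credentials /etc/nas-login accountname waits for the password; type it and press Enter.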

Finally, we edit the fstab file and add the following information as the last line:

Subscription

This content is only available to subscribers.

The example is for a Western Digital MyCloud drive, accessible via the URL //wdmycloud.local/account. The account must be configured for the corresponding user. The mount point under Linux is /media/nas. In most cases, you must create this directory beforehand with sudo mkdir /media/nas. For the credentials option, we specify the file containing our login information, /etc/nas-login. After a reboot, the NAS storage appears in the file browser and can be used. Depending on the network speed, this can extend the boot process by a few seconds. It takes even longer if the computer is not connected to the home network and the NAS is unavailable. You can also build your own NAS with a Raspberry Pi, but that could be the subject of a future article.
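Purely as an illustration, and assuming the share is accessed via SMB/CIFS (which the //host/share notation and the credentials file suggest), an fstab entry built from the values above could look like the output of the following Bash sketch. It is not necessarily the author's exact line. The helper fstab_cifs_line is hypothetical, and the options _netdev and nofail are my assumption: _netdev marks the mount as a network file system, and nofail lets the boot continue if the NAS cannot be reached.

```bash
#!/usr/bin/env bash
# fstab_cifs_line SHARE MOUNTPOINT CREDFILE (hypothetical helper)
# Prints, but does not write, an fstab entry for an SMB/CIFS share.
# Fields: source, mount point, type, options, dump flag, fsck order.
fstab_cifs_line() {
  local share=$1 mountpoint=$2 credfile=$3
  printf '%s %s cifs credentials=%s,_netdev,nofail 0 0\n' \
    "$share" "$mountpoint" "$credfile"
}
```

After reviewing the printed line, it could be appended with fstab_cifs_line //wdmycloud.local/account /media/nas /etc/nas-login | sudo tee -a /etc/fstab, and sudo mount -a then tests the entry without a reboot.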

Finally, I would like to say a few words about Western Digital’s service. I have already needed two replacement devices, and WD replaced them without any problems each time. Of course, I sent the service department my analysis in advance as screenshots, which ruled out any improper use on my part. The techniques presented in this article have helped me a great deal, which is why I came up with the idea of writing this text in the first place. I’ll leave it at that and hope the information gathered here is as helpful to you as it was to me.

Disk-Jock-Ey

Working with mass storage devices such as hard disks (HDDs), solid-state drives (SSDs), USB drives, memory cards, or network-attached storage devices (NAS) isn’t as difficult under Linux as many people believe. You just have to be able to let go of old habits you’ve developed under Windows. In this compact course, you’ll learn everything you need to master potential problems on Linux desktops and servers.

Before we dive into the topic in depth, a few important facts about the hardware itself. The basic principle here is: Buy cheap, buy twice. The problem isn’t even the device itself that needs replacing, but rather the potentially lost data and the effort of setting everything up again. I’ve had this experience especially with SSDs and memory cards, where it’s quite possible that you’ve been tricked by a fake product and the promised storage space isn’t available, even though the operating system displays full capacity. We’ll discuss how to handle such situations a little later, though.

Another important consideration is continuous operation. Most storage media are not designed to be switched on and used 24 hours a day, 7 days a week. Hard drives and SSDs designed for laptops quickly fail under constant load. Therefore, for continuous operation, as is the case with NAS systems, you should specifically look for devices designed for it. Western Digital, for example, has various product lines; its Red line is designed for continuous operation in servers and NAS systems. Note that such drives often trade some data transfer speed for a longer lifespan. But don’t worry, we won’t get lost in all the details that could be said about hardware, and will leave it at that to move on to the next point.

A significant difference between Linux and Windows is the file system, the mechanism by which the operating system organizes access to information. Windows uses NTFS as its file system, while USB sticks and memory cards are often formatted with FAT. One difference is that NTFS can store files larger than 4 GB, which FAT32 cannot. Device manufacturers prefer FAT for navigation systems or car radios because it is simple and widely supported. Under Linux, the ext3 and ext4 file systems are the most common. Of course, there are many other specialized formats, which we won’t discuss here. The major difference between Linux and Windows file systems is the security concept. FAT has no permission model at all, and NTFS manages access through Windows access control lists, whereas on ext3 and ext4 the Unix permissions (who may read, write, or execute a file or enter a directory) are a fundamental concept that Linux relies on.
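On ext3 and ext4, these permissions are stored in each file's metadata and can be displayed with GNU stat. A small Bash sketch, with the hypothetical helper name show_perms:

```bash
#!/usr/bin/env bash
# show_perms FILE (hypothetical helper)
# Prints the permission string, the octal mode, the owner and the name,
# all taken from the file's metadata rather than from its name.
show_perms() {
  stat -c '%A %a %U %n' -- "$1"
}
```

For a file created with mode 640, the output starts with -rw-r----- 640: the owner may read and write, the group may only read, and everyone else has no access.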

Storage devices formatted with NTFS or FAT can easily be connected to Linux computers, and their contents can be read. To avoid any risk of data loss when writing to network storage, which is often formatted with NTFS for compatibility reasons, the SMB protocol is used, which Linux supports through Samba. Samba is available in the package sources of most Linux distributions and can be installed in just a few moments. No special configuration of the service is required.

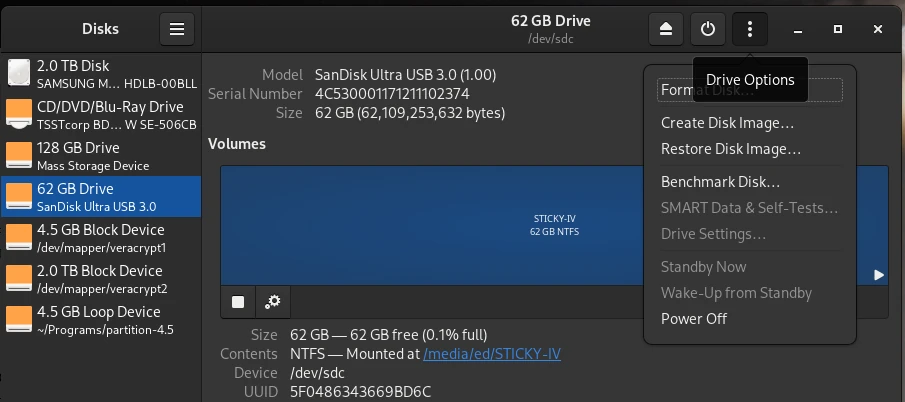

Now that we’ve learned what a file system is and what it’s used for, the question arises: how do you format external storage in Linux? The two graphical programs Disks and GParted are a good combination for this. Disks is a bit more versatile and allows you to create bootable USB sticks, which you can then use to install operating systems. GParted is better suited for extending existing partitions on hard drives or SSDs or for repairing broken partitions.

Before you read on and perhaps try to replicate one or two of these tips, it’s important that I offer a warning here. Before you try anything with your storage media, first create a backup of your data so you can fall back on it in case of disaster. I also expressly advise you to only attempt scenarios you understand and where you know what you’re doing. I assume no liability for any data loss.

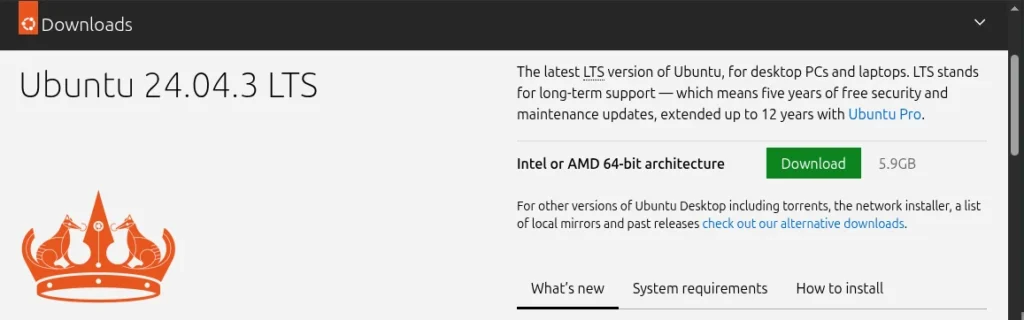

Bootable USB & Memory Cards with Disks

One scenario we occasionally need is the creation of bootable media. Whether it’s a USB flash drive for installing a Windows or Linux operating system, or installing the operating system on an SD card for use on a Raspberry Pi, the process is the same. Before we begin, we need an installation medium, which we can usually download as an ISO from the operating system manufacturer’s website, and a corresponding USB flash drive.

Next, open the Disks program and select the USB drive to which we want to write the ISO file. Then, click the three dots at the top of the window and select Restore Disk Image from the menu that appears. In the dialog that opens, select our ISO file in the Image to Restore input field and click Start Restoring. All existing data on the USB drive will be overwritten. That’s all you need to do.
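For reference, the same byte-for-byte copy can be sketched on the command line with dd. This is not part of the Disks workflow described above, and write_image is a hypothetical helper. TARGET must be the whole device (for example /dev/sdX, checked beforehand with lsblk), not a partition; everything on it is overwritten, and writing to a device usually requires sudo. The verification reads the data back, which may partly be served from the page cache.

```bash
#!/usr/bin/env bash
# write_image IMAGE TARGET (hypothetical helper)
# Copies IMAGE byte for byte to TARGET, then compares the SHA-256 checksum
# of the image with that of the same number of bytes read back from TARGET.
write_image() {
  local image=$1 target=$2 size
  size=$(stat -c %s "$image") || return 1
  # bs=4M: copy in 4 MiB blocks; conv=fsync: flush to the device before dd exits
  dd if="$image" of="$target" bs=4M conv=fsync status=none || return 1
  [ "$(sha256sum < "$image" | cut -d ' ' -f 1)" = \
    "$(head -c "$size" "$target" | sha256sum | cut -d ' ' -f 1)" ]
}
```

A call such as sudo bash -c "$(declare -f write_image); write_image debian.iso /dev/sdX" returns success only if the copy matches the image.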

Repairing Partitions and the MFT with GParted

Another scenario you might encounter is that data on a flash drive, for example, is unreadable. If the data itself isn’t corrupted, you might be lucky and be able to solve the problem with GParted. In some cases, (A) the partition table may be corrupted and the operating system simply doesn’t know where to start. Another possibility is that (B) the Master File Table (MFT) may be corrupted. The MFT records where on the storage medium each file is located. Both problems can often be resolved quickly with GParted.

Of course, it’s impossible to cover the many complex aspects of data recovery in a general article.

Now that we know that a hard drive consists of partitions, and that these partitions contain a file system, we can say that the partition table records where each partition is located and what type it is. To locate all files and directories within a partition, the operating system uses an index maintained by the file system; on NTFS, this is the so-called Master File Table. This connection leads us to the next point: the secure deletion of storage media.

Data Shredder – Secure Deletion

When we delete data on a storage medium, only the entry that tells the system where the file can be found is removed from the MFT. The file content therefore still exists and can still be found and read by special programs. Securely deleting files is only possible if we overwrite the free space multiple times. Since we can never know where a file was physically written on a storage medium, we must overwrite the entire free space after deletion. Specialists recommend three write passes, each with a different pattern, to make recovery impossible even for specialized labs. On SSDs and flash memory, however, overwriting is less reliable, because the controller spreads writes across the memory cells (wear leveling) and old data may survive in cells that are no longer addressable. A Linux program that can overwrite free space and also sweeps up “data junk” is BleachBit.

Securely overwriting deleted files is a somewhat lengthy process, depending on the size of the storage device, which is why it should only be done sporadically. However, you should definitely delete old storage devices completely when they are “sorted out” and then either disposed of or passed on to someone else.
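For individual files, GNU coreutils ships the shred command. The sketch below wraps it in a hypothetical function secure_delete: three passes of random data (-n 3), a final pass with zeros to hide the shredding (-z), then removal of the file (-u). The shred manual page itself warns that overwriting in place is not guaranteed to work on journaling or log-structured file systems, and on SSDs and flash media wear leveling can keep old copies alive, so this is no substitute for wiping the whole device.

```bash
#!/usr/bin/env bash
# secure_delete FILE... (hypothetical wrapper around GNU shred)
# -n 3: three overwrite passes with random data
# -z:   a final pass with zeros
# -u:   remove the file afterwards
secure_delete() {
  shred -n 3 -z -u -- "$@"
}
```

To wipe an entire device before disposal, shred can also be pointed at the device itself, for example sudo shred -v -n 3 /dev/sdX with sdX replaced by the correct name; this destroys all data on it.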

Mirroring Entire Hard Drives 1:1 – Clonezilla

Another scenario we may encounter is the need to create a copy of the hard drive. This is relevant when the existing hard drive or SSD in the current computer needs to be replaced with a new one with a higher storage capacity. Windows users often take this opportunity to reinstall their system, if only to stay in practice. Those who have been working with Linux for a while appreciate that Linux systems run very stably and that a reinstallation is only rarely necessary. Therefore, it is a good idea to copy the data from the current hard drive bit by bit to the new drive. This also applies to SSDs, of course, or from HDD to SSD and vice versa. We can accomplish this with the free tool Clonezilla. To do this, we create a bootable USB stick with Clonezilla and start the computer in the Clonezilla live system. We then connect the new drive to the computer using a SATA/USB adapter and start the data transfer. Once the copy has finished, and before we open up the computer and swap the disks, we change the boot order in the BIOS and check whether our attempt was successful. Only if the computer boots smoothly from the new disk do we proceed with the physical replacement. This short guide describes the basic procedure; I’ve deliberately omitted a detailed description, as the interface and operation may differ in newer Clonezilla versions.

SWAP – The Paging File in Linux

At this point, we’ll leave the graphical user interface and turn to the command line. We’ll deal with a very special area of storage that sometimes needs to be expanded: the swap space. On Linux, swap can be a dedicated partition or a swap file; Windows calls the equivalent the paging file. The operating system moves data that no longer fits into RAM to swap and reads it back into RAM when it is needed again. However, it can happen that the swap space is too small and needs to be expanded. But that’s not rocket science, as we’ll see shortly.
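Before enlarging swap, it helps to know how much is currently configured. The kernel reports this as SwapTotal (in kB) in /proc/meminfo. The following Bash sketch, with the hypothetical function name swap_total_mib, converts the value to MiB; the optional file argument exists only to make testing easier.

```bash
#!/usr/bin/env bash
# swap_total_mib [MEMINFO] (hypothetical helper)
# Prints the configured swap size in MiB, read from /proc/meminfo
# (or from the given file); SwapTotal is reported there in kB.
swap_total_mib() {
  awk '/^SwapTotal:/ { printf "%d\n", $2 / 1024 }' "${1:-/proc/meminfo}"
}
```

In addition, swapon --show lists each active swap area, whether partition or file, together with its size.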

Subscription

This content is only available to subscribers.

We’ve already discussed quite a bit about handling storage media under Linux. In the second part of this series, we’ll delve deeper into the capabilities of command-line programs and look, for example, at how NAS storage can be permanently mounted in the system. Strategies for identifying defective storage devices will also be the subject of the next part. I hope I’ve piqued your interest and would be delighted if you would share the articles from this blog.

Pathfinder

So that we can call console programs directly from anywhere in the system without having to specify the full path, we use the so-called path variable. We add the directory that contains the executable program, the so-called executable, to this path variable, so that we no longer have to type the full path on the command line. By the way, the file extension exe, which is common in Windows, is derived from the word executable. Here we also have a significant difference between the two operating systems Windows and Linux. While Windows uses the file extension, such as exe or txt, to tell whether a file is a plain text file or an executable, Linux uses the file’s metadata, namely its execute permission, to make this distinction. That’s why the file extensions txt and exe are rather unusual under Linux.
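A short Bash experiment illustrates that Linux decides by the execute permission and not by a file extension. The script name hello and its contents are made up for this demonstration:

```bash
#!/usr/bin/env bash
# Create a small script without any file extension in a temporary directory
demo_dir=$(mktemp -d)
printf '#!/bin/sh\necho "Hello from hello"\n' > "$demo_dir/hello"

# Newly created files are not executable by default
[ -x "$demo_dir/hello" ] || echo "hello is not executable yet"

# Setting the execute permission (stored in the file's metadata) makes it a program
chmod +x "$demo_dir/hello"
"$demo_dir/hello"
```

The file name never changes; only the permission bit decides whether the shell may run it.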

Typical use cases for setting the path variable are programming languages such as Java or tools such as the Maven build tool. For example, if we download Maven from the official homepage, we can unpack the program anywhere on our system. On Linux, the location could be /opt/maven, and on Microsoft Windows it could be C:\Program Files\Maven. In this installation directory there is a subdirectory bin, which contains the executable programs. The executable for Maven is called mvn, and to output the version under Linux without an entry in the path variable, the command would be /opt/maven/bin/mvn -v. That is admittedly a bit long. Adding the bin directory of the Maven installation to the path shortens the entire command to mvn -v. By the way, this mechanism applies to all programs that we use as commands in the console.
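The lookup can be simulated without installing Maven. In this Bash sketch, the installation directory is a temporary one and mvn is a made-up stand-in script, not the real Maven. The bin directory is prepended here so that the stand-in wins over any real mvn that may already be installed; command -v shows which file the shell actually resolves.

```bash
#!/usr/bin/env bash
# Simulate an unpacked tool: an installation directory with a bin/
# subdirectory containing a stand-in "mvn" script
install_dir=$(mktemp -d)
mkdir -p "$install_dir/bin"
printf '#!/bin/sh\necho "fake mvn 0.0.1"\n' > "$install_dir/bin/mvn"
chmod +x "$install_dir/bin/mvn"

# Without a PATH entry, the program must be called with its full path
"$install_dir/bin/mvn" -v

# After adding the bin directory to PATH, the short name is enough
PATH="$install_dir/bin:$PATH"
command -v mvn
mvn -v
```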

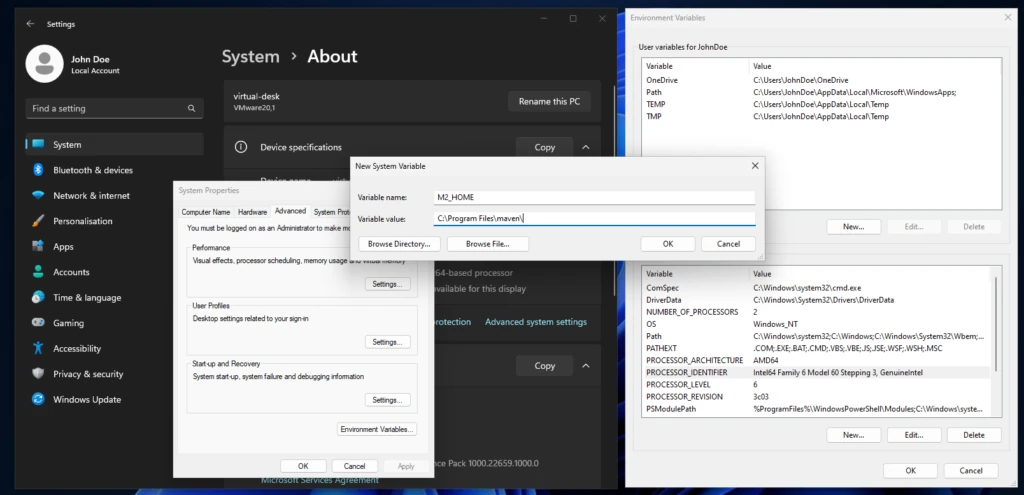

Before I get to how the path variable can be adjusted under Linux and Windows, I would like to introduce another concept: the system variable, also called environment variable. System variables are global variables that are available to us in Bash. The path variable is one of them. Another system variable is HOME, which points to the logged-in user’s home directory. By convention, system variables are written in capital letters, with words separated by an underscore. For our example of adding the Maven executable to the path, we can also define our own system variable. The convention for Maven is M2_HOME, and for Java it is JAVA_HOME. As a best practice, you bind the installation directory to a system variable and then use this self-defined variable to extend the path. This approach is quite typical for system administrators who simplify their server installations with system variables, because these variables are global and can also be read by automation scripts.

The command line, also known as shell, bash, console, or terminal, offers an easy way to output the value of a system variable with echo. Using the path variable as an example, we can immediately see the difference between Linux and Windows: on Linux, we type echo $PATH; on Windows (CMD), echo %PATH%; and in PowerShell, $env:PATH.

ed@local:~$ echo $PATH

/usr/local/bin:/usr/bin:/bin:/usr/local/games:/usr/games:/snap/bin:/home/ed/Programs/maven/bin:/home/ed/.local/share/gem//bin:/home/ed/.local/bin:/usr/share/openjfx/lib

Let’s start with the simplest way to set the path variable. In Linux, we just need to edit the hidden .bashrc file in our home directory. At the end of the file, we add the following lines and save the content.

export M2_HOME="/opt/maven"
export PATH="$PATH:$M2_HOME/bin"

We bind the installation directory to the M2_HOME variable and then extend the path variable with M2_HOME plus the subdirectory containing the executable files. This procedure is also common on Windows systems, as it makes the installation path of an application quicker to find and adjust. After modifying the .bashrc file, the terminal must be restarted for the changes to take effect (alternatively, run source ~/.bashrc in the open terminal). Because the entries are stored in .bashrc, they are not lost when the computer is restarted.
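One detail of the .bashrc approach: the export line appends the directory again in every nested shell, so the path variable can accumulate duplicates. A small guard avoids this. The function name add_to_path is hypothetical; in .bashrc it could replace the second export line.

```bash
#!/usr/bin/env bash
# add_to_path DIR (hypothetical helper)
# Appends DIR to PATH unless it is already listed there.
add_to_path() {
  case ":$PATH:" in
    *":$1:"*) ;;            # already present, nothing to do
    *) PATH="$PATH:$1" ;;   # append at the end, like the export line
  esac
  export PATH
}
```

In .bashrc, the call would be add_to_path "$M2_HOME/bin", placed after the export M2_HOME line.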

Under Windows, the challenge is simply to find the input mask where the system variables can be set. In this article, I will limit myself to Windows 11. The way to edit the system variables may of course change in a future update, and there are slight variations between the individual Windows versions. The setting then applies to both CMD and PowerShell. The screenshot below shows how to access the system settings in Windows 11.

To do this, we right-click on an empty area on the desktop and select the System entry. In the System – About submenu, you will find the link to the advanced system settings, which opens the System Properties dialog. There, we press the Environment Variables button to get to the final input mask. After making the appropriate adjustments, the console must also be restarted for the changes to take effect.

In this short guide, we learned about the purpose of system variables and how to store them permanently on Linux and Windows. We can then quickly check the success of our efforts in the shell by outputting the contents of the variables with echo. And we are now one step closer to becoming an IT professional.
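Since the path variable is one long colon-separated string, the output of echo $PATH can be hard to read. A small Bash sketch, with the hypothetical function name path_entries, prints each entry on its own line in the order in which the shell searches them:

```bash
#!/usr/bin/env bash
# path_entries (hypothetical helper)
# Prints every directory listed in PATH on its own numbered line.
path_entries() {
  local -a dirs
  local i
  IFS=':' read -r -a dirs <<< "$PATH"   # split PATH at the colons
  for i in "${!dirs[@]}"; do
    printf '%2d  %s\n' "$((i + 1))" "${dirs[i]}"
  done
}
```

With PATH set to /a/bin:/b/bin, the function prints two numbered lines, one for each directory.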

PHP Elegant Testing with Laravel

The PHP programming language has been the first choice for many developers in the field of web applications for decades. Since the introduction of object-oriented language features with version 5, PHP has come of age. Large projects can now be implemented in a clean and, above all, maintainable architecture. A striking difference between commercial software development and a hobbyist who has assembled and maintains a club’s website is the automated verification that the application meets its specifications. This brings us into the realm of automated software testing.

A key principle of automated software testing is that it verifies, without additional interaction, that the application exhibits a predetermined behavior. Software tests cannot guarantee that an application is error-free, but they do increase quality and reduce the number of potential errors. The most important aspect of automated software testing is that behavior already defined in tests can be quickly verified at any time. This ensures that if developers extend an existing function or optimize its execution speed, the existing functionality is not affected. In short, we have a powerful tool for ensuring that we haven’t broken anything in our code without having to laboriously click through all the options manually each time.

To be fair, it’s also worth mentioning that the automated tests have to be developed, which initially takes time. However, this ‘supposed’ extra effort quickly pays off once the test cases are run multiple times to ensure that the status quo hasn’t changed. Of course, the created test cases also have to be maintained.

If, for example, an error is detected, you first write a test case that reproduces the error. The repair is complete once the test case(s) pass. However, changes in the behavior of existing functionality always require corresponding adaptation of the associated tests. This concept of writing tests alongside, or even before, the implementation of a function is feasible in many programming languages and is called test-driven development. From my own experience, I recommend taking a test-driven approach even for relatively small projects. Large applications are complex, and testing them well requires some experience. Small projects often lack this complexity, which gives you the opportunity to develop your testing skills within a manageable framework.

Test-driven software development is nothing new in PHP either. Sebastian Bergmann’s unit testing framework PHPUnit has been around since 2001. The PEST testing framework, released around 2021, builds on PHPUnit and extends it with a multitude of new features. PEST stands for PHP Elegant Testing and defines itself as a next-generation tool. Since many agencies, especially smaller ones, that develop their software in PHP generally limit themselves to manual testing, I would like to use this short article to demonstrate how easy it is to use PEST. Of course, there is a wealth of literature on the topic of test-driven software development, which focuses on how to optimally organize tests in a project. This knowledge is ideal for developers who have already taken their first steps with testing frameworks. These books teach you how to develop independent, low-maintenance, and high-performance tests with as little effort as possible. However, to get to this point, you first have to overcome the initial hurdle: installing the entire environment.

A typical environment for self-developed web projects is the Laravel framework. When creating a new Laravel web project, you can choose between PHPUnit and PEST. Laravel takes care of all the necessary details. A functioning PHP environment is required as a prerequisite. This can be a Docker container, a native installation, or the XAMPP server environment from Apache Friends. For our short example, I’ll use the PHP CLI on Debian Linux.

sudo apt-get install php-cli php-mbstring php-xml php-pcov

After executing the command in the console, you can test the installation success using the php -v command. The next step is to use a package manager to deploy other PHP libraries for our application. Composer is one such package manager. It can also be quickly deployed to the system with just a few instructions.

php -r "copy('https://getcomposer.org/installer', 'composer-setup.php');"

php -r "if (hash_file('sha384', 'composer-setup.php') === 'ed0feb545ba87161262f2d45a633e34f591ebb3381f2e0063c345ebea4d228dd0043083717770234ec00c5a9f9593792') { echo 'Installer verified'.PHP_EOL; } else { echo 'Installer corrupt'.PHP_EOL; unlink('composer-setup.php'); exit(1); }"

php composer-setup.php

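php -r "unlink('composer-setup.php');"

These commands download the Composer installer, check it against its published SHA-384 hash, run it, and then delete it again. The result is the file composer.phar in the directory in which the commands were executed. Because the hash changes with every new installer release, the current commands should always be copied from the Composer download page (https://getcomposer.org/download/). To make Composer globally available on the command line, you can either include its directory in the path variable or link composer.phar into a directory that is already included in the path variable. I prefer the latter option and achieve this with: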

ln -s "$PWD/composer.phar" "$HOME/.local/bin/composer"

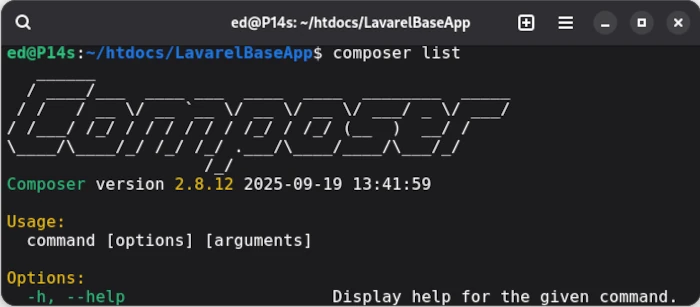

If everything was executed correctly, composer list should now display the version, including the available commands. If this is the case, we can install the Laravel installer globally via Composer.

composer global require laravel/installer

To call the Laravel installer conveniently from Bash, we bind Composer’s global directory to the variable COMPOSER_HOME. To find out where Composer created this directory, simply use the command composer config -g home. The resulting path, which in my case is /home/ed/.config/composer, is then assigned to COMPOSER_HOME, for example with export COMPOSER_HOME="$(composer config -g home)". We can now run

php $COMPOSER_HOME/vendor/bin/laravel new MyApp

in an empty directory to create a new Laravel project. The corresponding console output looks like this:

ed@P14s:~/Downloads/test$ php $COMPOSER_HOME/vendor/bin/laravel new MyApp


┌ Which starter kit would you like to install? ────────────────┐

│ None │

└──────────────────────────────────────────────────────────────┘

┌ Which testing framework do you prefer? ──────────────────────┐

│ Pest │

└──────────────────────────────────────────────────────────────┘

Creating a "laravel/laravel" project at "./MyApp"

Installing laravel/laravel (v12.4.0)

- Installing laravel/laravel (v12.4.0): Extracting archive

Created project in /home/ed/Downloads/test/MyApp

Loading composer repositories with package information

The directory structure created in this way contains the tests folder, where the test cases are stored, and the phpunit.xml file, which contains the test configuration. Laravel defines two test suites, Unit and Feature, each of which already contains a demo test. To run the two demo test cases, we use the artisan command-line tool [1] provided by Laravel. To run the tests, simply enter the php artisan test command in the project’s root directory.

To assess the quality of the test cases, we need to determine the test coverage. We obtain it with the same artisan test command, extended by the --coverage parameter. This requires a coverage driver such as PCOV, which we installed at the beginning with the php-pcov package.

php artisan test --coverage

The output for the demo test cases provided by Laravel is as follows:

Unfortunately, artisan’s capabilities for executing test cases are very limited. To utilize PEST’s full functionality, the PEST executor should be used right from the start.

php ./vendor/bin/pest -h

The PEST executor is the file vendor/bin/pest, and the -h parameter displays its help. Beyond that, we’ll focus on the tests folder mentioned earlier. Out of the box, two test suites are preconfigured in the phpunit.xml file. The test files themselves should end with the suffix Test, as in ExampleTest.php.

Compared to other testing frameworks, PEST attempts to support as many concepts of automated test execution as possible. To maintain clarity, each test level should be stored in its own test suite. In addition to classic unit tests, browser tests, stress tests, architecture tests, and even the newly emerging mutation testing are supported. Of course, this article can’t cover all aspects of PEST, and there are now many high-quality tutorials available for writing classic unit tests in PEST. Therefore, I’ll limit myself to an overview and a few less common concepts.

Architecture test

The purpose of architectural tests is to provide a simple way to verify whether developers are adhering to the specifications. This includes, among other things, ensuring that classes representing data models are located in a specified directory and may only be accessed via specialized classes.

test('models')
    ->expect('App\Models')
    ->toOnlyBeUsedIn('App\Repositories')
    ->toOnlyUse('Illuminate\Database');

Mutation-Test

This form of testing is relatively new. The idea is to create so-called mutants by making small changes to the original implementation, for example to its conditions. If the tests still pass for a mutant instead of failing, this is a strong indication that the test cases are weak and do not check the behavior meaningfully.

Original: if(TRUE) → Mutant: if(FALSE)

Stress-Test

Stress tests, closely related to load tests, focus specifically on the performance of an application. They allow you to ensure, for example, that a web app can handle a defined number of requests.

Of course, there are many other helpful features available. For example, you can group tests and then run the groups individually.

// definition (in tests/Pest.php)
pest()->extend(TestCase::class)
    ->group('feature')
    ->in('Feature');

// calling
php ./vendor/bin/pest --group=feature

For those who don’t work with the Laravel framework but still want to test in PHP with PEST, you can also integrate the PEST framework into your application. All you need to do is add PEST as a development dependency in the Composer project configuration, for example with composer require pestphp/pest --dev --with-all-dependencies. Then, you can initiate the initial test setup in the project’s root directory.

php ./vendor/bin/pest --init

As we’ve seen, the options briefly presented here alone are very powerful. The official PEST documentation is also very detailed and should generally be your first port of call. In this article, I focused primarily on minimizing the entry barriers for test-driven development in PHP. PHP now also offers a wealth of options for implementing commercial software projects very efficiently and reliably.

Resources

- [1] Artisan Cheat Sheet: https://artisan.page

- [2] PEST Homepage: https://pestphp.com

Take professional screenshots

Over the course of the many hours they spend in front of this amazing device, almost every computer user will find themselves in need of saving the screen content as a graphic. The process of creating an image of the monitor’s contents is what seasoned professionals call taking a screenshot.

As with so many things, there are many ways to achieve a screenshot. Some very resourceful people solve the problem by simply pointing their smartphone at the monitor and taking a photo. Why not? As long as you can still recognize something afterwards, everything’s fine. But this short guide doesn’t end there; we’ll take a closer look at the many ways to create screenshots. Even professionals who occasionally write instructions, for example, have to overcome one or two pitfalls.

Before we get to the nitty-gritty, it’s important to mention that it makes a difference whether you want to save the entire screen, the browser window, or even the invisible area of a website as a screenshot. The solution presented for the web browser works pretty much the same for all web browsers on all operating systems. Screenshots of the monitor area rather than a web page rely on the tools of the installed operating system. For this reason, we also differentiate between Linux and Windows. Let’s start with the most common scenario: browser screenshots.

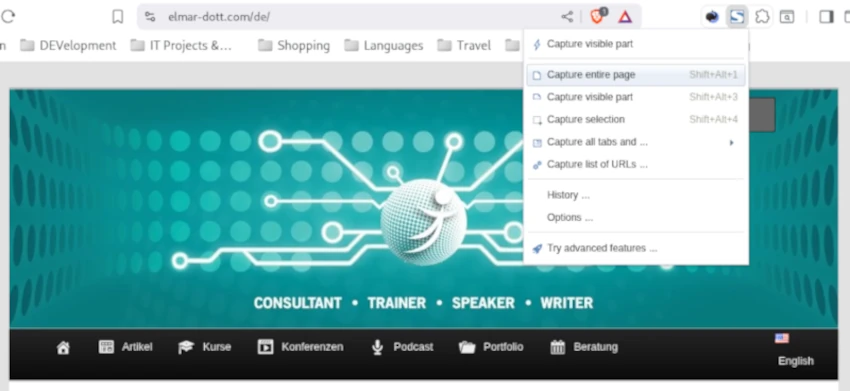

Browser

Especially when ordering online, many people feel more comfortable when they can additionally document their purchase with a screenshot. It’s also not uncommon to occasionally save instructions from a website for later use. When taking screenshots of websites, one often encounters the problem that a single page is longer than the area displayed on the monitor. Naturally, the goal is to save the entire content, not just the displayed area. For precisely this case, a browser plugin is the most convenient option; Firefox also has a built-in screenshot function that can save the full page.

FireShot is a plug-in available for all common browsers, such as Brave, Firefox, and Microsoft Edge, that allows us to create screenshots of websites, including content outside the visible area. The extension has been on the market for a very long time and comes with a free version that is already sufficient for the scenario described. Anyone who also needs an image editor when taking screenshots, for example to highlight areas and add labels, can use the paid Pro version. The integrated editor significantly accelerates workflows in professional settings, such as when creating manuals and documentation. Of course, similar results can be achieved with an external image editor like GIMP. GIMP is a free image editing program, comparable in power to the commercial Photoshop, and is available for Windows and Linux.

Linux

If we want to take screenshots outside the web browser, we can simply use the operating system's built-in tools. On Linux, you don't need to install any additional programs; everything you need is already there. On the GNOME desktop, for example, pressing the Print key opens the screenshot tool. Simply drag the mouse around the area you want to capture and click Capture in the control panel that appears. Don't worry if the control panel overlaps the area you are capturing; it won't appear in the screenshot. On German keyboards, the key is usually labeled Druck instead of Print. The finished screenshot is saved with a timestamp in its file name to the Screenshots folder, a subfolder of Pictures in the user's home directory.
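If you want to pick up the most recent screenshot in a script, for example to post-process it automatically, the following minimal Python sketch looks it up by modification time. It assumes GNOME's default location ~/Pictures/Screenshots; on localized systems the folder names can differ, so the path is a parameter. The function name latest_screenshot and the list of accepted file extensions are illustrative assumptions, not part of any tool.

```python
from pathlib import Path
from typing import Optional, Tuple

# Assumption: GNOME's default screenshot folder. Localized systems may use
# different folder names, so the path can be passed in explicitly.
DEFAULT_DIR = Path.home() / "Pictures" / "Screenshots"


def latest_screenshot(
    folder: Path = DEFAULT_DIR,
    suffixes: Tuple[str, ...] = (".png", ".jpg", ".jpeg"),
) -> Optional[Path]:
    """Return the most recently modified image file in `folder`, or None."""
    if not folder.is_dir():
        return None
    # Keep only regular files whose extension looks like an image.
    images = (
        p for p in folder.iterdir()
        if p.is_file() and p.suffix.lower() in suffixes
    )
    # The newest file by modification time is the latest screenshot.
    return max(images, key=lambda p: p.stat().st_mtime, default=None)


if __name__ == "__main__":
    print(latest_screenshot())
```

Sorting by modification time rather than parsing the timestamp in the file name keeps the sketch independent of the exact naming scheme, which varies between desktop environments and languages.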

Windows

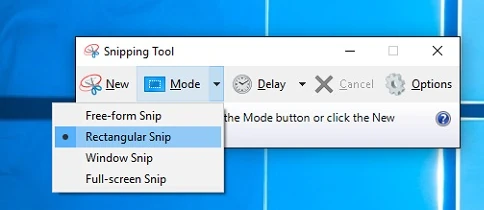

The easiest way to take screenshots in Windows is to use the Snipping Tool, which is usually included with your Windows installation. It’s also intuitive to use.

Another long-established method on Windows, which needs no dedicated screenshot program, is to press the Ctrl and Print Screen keys together. Then open a graphics program such as Paint, which is included with every Windows installation, and press Ctrl + V in the drawing area: the screenshot appears and can be edited immediately.

Depending on the tool and its settings, screenshots are saved as PNG or JPG. JPG uses lossy compression, so if you save in that format, check that the text in the screenshot is still readable. Especially with current monitors around 2000 pixels wide, using the image on a website requires manual post-processing. One option is to reduce the width from just under 2000 pixels (for example, 1920) to the roughly 1000 pixels that are common on websites. Ideally, save the scaled and edited graphic in WebP format. WebP supports both lossy and lossless compression and usually produces smaller files than JPG or PNG at comparable quality, which is very beneficial for website loading times.
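The scaling and conversion step can also be scripted. The following Python sketch computes the new size for a target width of 1000 pixels while keeping the aspect ratio, and converts a file to WebP. It assumes the third-party Pillow library (pip install Pillow) built with WebP support; the function names and the quality value of 80 are illustrative assumptions, not recommendations from any tool.

```python
from typing import Tuple


def scaled_size(width: int, height: int, target_width: int = 1000) -> Tuple[int, int]:
    """Scale (width, height) down to target_width while keeping the aspect ratio.

    Images that are already narrow enough are returned unchanged (no upscaling).
    """
    if width <= target_width:
        return width, height
    new_height = max(1, round(height * target_width / width))
    return target_width, new_height


def convert_to_webp(src: str, dst: str, target_width: int = 1000, quality: int = 80) -> None:
    """Resize a screenshot and save it as WebP.

    Requires the third-party Pillow library with WebP support.
    `quality` (an assumed default of 80) applies to lossy encoding.
    """
    from PIL import Image  # imported here so scaled_size() works without Pillow

    with Image.open(src) as img:
        resized = img.resize(scaled_size(img.width, img.height, target_width))
        resized.save(dst, "WEBP", quality=quality)


if __name__ == "__main__":
    print(scaled_size(1920, 1200))
```

For example, convert_to_webp("screenshot.png", "screenshot.webp") would turn a 1920 × 1200 screenshot into a 1000 × 625 WebP file. If you prefer pixel-exact output, Pillow's WebP writer also accepts lossless=True.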

This already covers a good range of possibilities for taking screenshots. Of course, more could be said about this, but that falls into the realm of graphic design and the efficient use of image editing software.